For a while I have been integrating Terraform resource deployment of Azure VMs with Azure Desired State Configuration inside of them (previous blog post).

Over time my method for deploying the Azure resources to support the DSC configurations has matured into a PowerShell script that checks and creates per-requisites, however there was still a bunch of manual effort to go through, including the creation of the Automation Run-As account.

This was one of the first things I started building in an Azure DevOps pipeline; it was a good idea having now spent a bunch of time getting it working, and learned a bunch too.

Some assumptions are made here. You have:

- an Azure DevOps organization to play around with

- an Azure subscription

- the capability/authorization to create new service principals in the Azure Active Directory associated with your subscription.

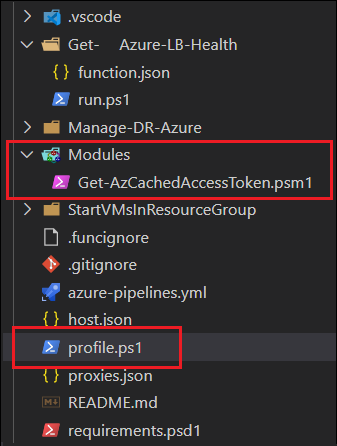

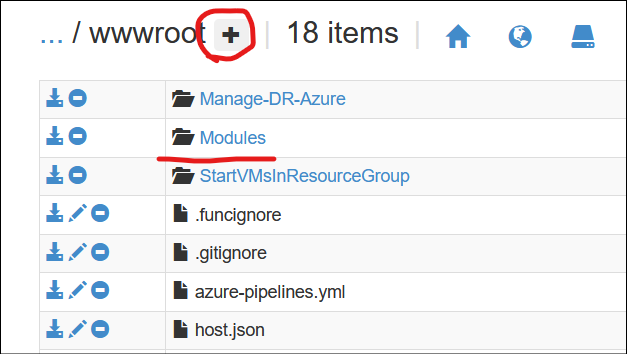

You can find the GitHub repository containing the code here. with the README file containing the information required to use the code and replicate it.

A few of the key considerations that I wanted to include (and where they are solved) were:

- How do I automatically create a Run-as account for Azure Automation, when it is so simple in the portal (1 click!)

- Check the New-RunAsAccount.ps1 file, which gets called by the Create-AutomationAccount.ps1 script in a pipeline

- Azure Automation default modules are quite out of date, and cause problems with using new DSC resources and syntax. Need to update them.

- Create-AutomationAccount.ps1 grabs the latest Update-AutomationAzureModulesForAccount runbook, imports it, publishes it, and then runs it

- I make use of Az PowerShell in runbooks, so I need to add those modules, but Az.Accounts is a pre-req for the others, so it must be handled differently

- Create-AutomationAccount.ps1 has a section to do this for Az.Accounts, and then a separate function that is called to import any other modules from the gallery that are needed (defined in the parameters file)

- Want to use DSC composites, but need a mechanism of uploading that DSC module as an Automation Account module automatically

- A separate pipeline found on “ModuleDeploy-pipeline.yml” is used with tasks to achieve this

- Don’t repeat parameters between scripts or files – one location where I define them, and re-use them

- See “dsc_parameters.ps1”, which gets dot-sourced in the scripts which are directly called from pipeline tasks

Most importantly, are the requirements to get started, being:

- An Azure Subscription in which to deploy resources

- An Azure KeyVault that will be used to generate certificates

- An Azure Storage Account with a container, to store composite module zip

- An Azure DevOps organization you can create pipelines in

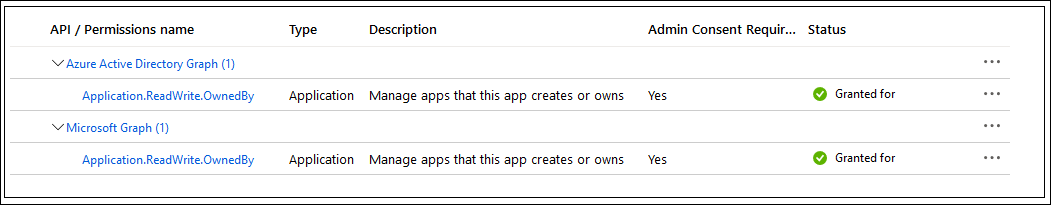

- An Azure Service Principal with the following RBAC or API permissions: (so that it can itself create new service principals)

- An Azure DevOps Service Connection linked to the Service Principal above

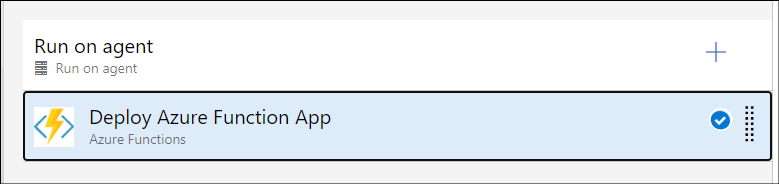

The result is that we have two different pipelines which can do the following:

- ModuleDeploy-pipeline.yml pipeline runs and

- takes module from repository and creates a zip file

- uploads DSC composite module zip to blob storage

- creates automation account if it doesn’t exist

- imports DSC composite module to automation account from blob storage (with SAS)

- azure-pipelines.yml pipeline runs and:

- creates automation account if it doesn’t exist

- imports/updates Az.Accounts module

- imports/updates remaining modules identified in parameters

- creates new automation runas account (and required service principal) if it doesn’t exist (generating an Azure KeyVault certificate to do so)

- performs a ‘first-time’ run of the “Update-AutomationAzureModulesForAccount” runbook (because automation account is created with out-of-date default modules)

- imports DSC configuration

- compiles DSC configuration against configuration data