A co-worker and I just completed an upgrade of our 2-node Server 2012 Hyper-V cluster to a 3-node Server 2012 R2 cluster, and it went very smoothly.

I’ve been looking forward to some of the improvements in Hyper-V 2012 R2, as well as the 3rd node, which is going to be the basis for our Citrix XenApp implementation (with an NVIDIA GRID K1 GPU).

I’ve posted before about my Hyper-V implementation, which used iSCSI over direct connections rather than through switches, since I only had 2 hosts.

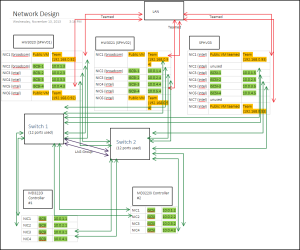

For this most recent upgrade I needed to add a 3rd host, which meant a real iSCSI SAN. Here’s the network design I moved forward with:

This time I actually checked compatibility of my hardware before proceeding, and found no issues to be concerned about.

The upgrade process is described below. It includes the extra steps required when 1) renaming hosts that are in use with an MD3220i, and 2) converting from direct connect to an iSCSI SAN:

Before maintenance window

- Install redundant switches in the rack (I used PowerConnect 5548s)

- Live Migrate VMs from Server1 to Server2

- Remove Server1 from Cluster membership (Evict Node)

- Wipe and reinstall Windows Server 2012 R2 on Server1

- Configure Server1 with new iSCSI configuration as documented

- Re-cable iSCSI NIC ports to redundant switches

- Create new Failover Cluster on Server1

- From Server1 run “Copy Cluster Roles” wizard (previously known as “Cluster Migration Wizard”)

- This will copy VM configuration, CSV info and cluster networks to the new cluster
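The cluster-side steps in this list (live migration, eviction, creating the new cluster) could also be scripted. Here is a minimal Python sketch that only prints the PowerShell commands you would then review and run on the hosts. It is not what was run during this upgrade. The cmdlets come from the FailoverClusters module; the VM names and the cluster name are hypothetical placeholders, and `New-Cluster` would normally also need a `-StaticAddress` unless the cluster network uses DHCP. The "Copy Cluster Roles" wizard is left out, since it is a GUI step.

```python
"""Print FailoverClusters PowerShell commands for the pre-window steps (sketch)."""


def quote(value: str) -> str:
    # PowerShell single-quoted string; embedded single quotes are doubled.
    return "'" + value.replace("'", "''") + "'"


def pre_window_commands(vms, rebuilt_node, target_node, new_cluster):
    """Return the commands in the order they would be run."""
    commands = []
    # Live-migrate every VM off the node that is about to be rebuilt.
    for vm in vms:
        commands.append(
            f"Move-ClusterVirtualMachineRole -Name {quote(vm)} "
            f"-Node {quote(target_node)} -MigrationType Live"
        )
    # Evict the drained node from the original cluster.
    commands.append(f"Remove-ClusterNode -Name {quote(rebuilt_node)}")
    # After the wipe and reinstall, create the new single-node cluster on it.
    commands.append(
        f"New-Cluster -Name {quote(new_cluster)} -Node {quote(rebuilt_node)}"
    )
    return commands


if __name__ == "__main__":
    # VM names and the cluster name below are hypothetical placeholders.
    for line in pre_window_commands(
        ["VM-App01", "VM-SQL01"], "Server1", "Server2", "HVCluster-R2"
    ):
        print(line)
```

Printing the commands rather than executing them keeps a human in the loop for each step, which seems sensible when one of the steps is evicting a node.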

Within maintenance window

- When ready to cut over:

- Power down VMs on Server2.

- Take the CSVs on the original cluster offline

- Power down Server2

- Remap the host mappings for each server in Modular Disk Storage Manager (MDSM) to the “unused iSCSI initiator” after renaming the host; otherwise you won’t find any available iSCSI disks

- Reconfigure iSCSI port IP addresses for MD3220i controllers

- Add host to MDSM (for new 3rd node)

- Configure iSCSI Storage on Server1 (followed this helpful guide)

- On Server1, bring the CSVs online

- Start VMs on Server1, ensure they’re online and working properly

At this point I had a fully functioning, single-node cluster on Server 2012 R2. With the right planning you can do this with 5-15 minutes of downtime for your VMs.

Next I added the second node:

- Evict Server2 from Old Cluster, effectively killing it.

- Wipe and reinstall Windows Server 2012 R2 on Server2

- Configure Server2 with new iSCSI configuration as documented

- Re-cable iSCSI NICs to redundant switches

- Join Server2 to cluster membership

- Re-allocate VMs to Server2 to share the load

I still had to reset the preferred node and failover options on each VM.
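Joining the node and resetting preferred owners can be sketched the same way. As before, this Python snippet only prints PowerShell commands for review. `Add-ClusterNode` and `Set-ClusterOwnerNode` are FailoverClusters cmdlets; the VM names and the owner order are hypothetical, and the other failover options are not covered here.

```python
"""Print commands to join a node and set preferred owners per VM (sketch)."""


def quote(value: str) -> str:
    # PowerShell single-quoted string; embedded single quotes are doubled.
    return "'" + value.replace("'", "''") + "'"


def join_and_owner_commands(new_node, preferred_owners):
    """preferred_owners maps a VM role name to its owner nodes, in order."""
    # Join the rebuilt host to the existing cluster.
    commands = [f"Add-ClusterNode -Name {quote(new_node)}"]
    # Set the preferred owner list for each VM's cluster group.
    for vm, owners in preferred_owners.items():
        owner_list = ",".join(quote(owner) for owner in owners)
        commands.append(
            f"Set-ClusterOwnerNode -Group {quote(vm)} -Owners {owner_list}"
        )
    return commands


if __name__ == "__main__":
    # VM names and owner order below are hypothetical placeholders.
    plan = {
        "VM-App01": ["Server1", "Server2"],
        "VM-SQL01": ["Server2", "Server1"],
    }
    for line in join_and_owner_commands("Server2", plan):
        print(line)
```

Keeping the owner plan in one dictionary makes it easy to rerun with a 3rd node added to each list.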

Adding the 3rd node followed exactly the same process. The Cluster Validation Wizard gave a few errors about the processors not being the exact same model; however, I had no concerns there, as the new host simply has a newer-generation Intel Xeon.

The one remaining task for me is to upgrade the Integration Services on each of my VMs, which will require a reboot, so I’m holding off for now.