I previously blogged about my SC847 backup storage array, and how I was contemplating using Windows Server 2012 Storage Spaces to manage the storage redundantly.

Yesterday I began setting that up, and it was very easy to configure. My only complaint about the process is that the wizard gives you very little information about the implications of your choices (such as Simple, Mirror or Parity virtual disks) at the moment you are actually making them.

Since this storage was just being set up, I decided to familiarize myself with the failover functions of the storage spaces.

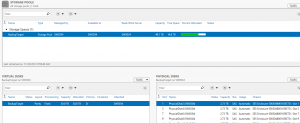

For the first test I set up a storage space and virtual disk, and then removed a hard drive to see what would happen. Both the storage space and the virtual disk went into an alert state, and the physical disk list showed the removed disk as “Lost Communication”.

I wiped away all of the configuration and recreated it, this time with a hot spare. When I repeated the test of pulling a hard drive, I expected the hot spare to take over immediately and the parity on my virtual disk to be rebuilt, but this didn’t happen.

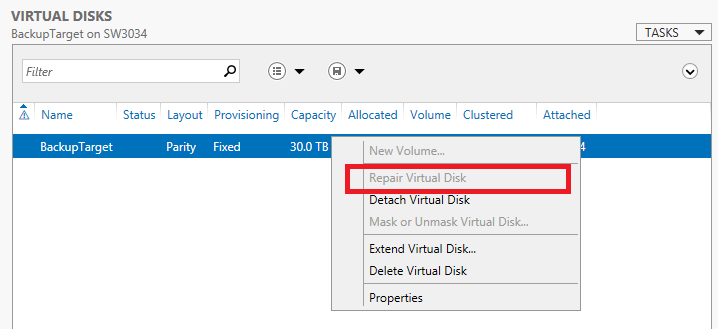

Right-clicking the virtual disk and choosing “Repair” did force the virtual disk to use the hot spare, though.
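The same repair can presumably be started from PowerShell instead of the GUI. What follows is only a rough sketch: the virtual disk name `BackupVD` is a placeholder I made up, so substitute whatever `Get-VirtualDisk` reports on your system.

```powershell
# Hypothetical virtual disk name; list the real ones with Get-VirtualDisk
$vdName = "BackupVD"

# Check the current health of the virtual disk before repairing
Get-VirtualDisk -FriendlyName $vdName |
    Select-Object FriendlyName, HealthStatus, OperationalStatus

# Start the repair, which lets the virtual disk make use of an available hot spare
Repair-VirtualDisk -FriendlyName $vdName

# The repair runs as a background storage job; this shows its progress
Get-StorageJob
```

Running the `Get-VirtualDisk` line again once the job has finished is a quick way to confirm that the virtual disk has left its alert state.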

While attempting to figure out the intended behavior, I came across this blog post by Baris Eris detailing the hot spare operation in depth. I won’t repeat everything here; instead I highly recommend you read what Baris has written as it is excellent.

One thing I will note is that I also had to use the PowerShell command to switch the reconnected disk to a hot spare, but after doing that the red LED on my SC847 stayed lit until I power cycled the unit.
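For reference, a minimal sketch of what that looks like, assuming the command in question is `Set-PhysicalDisk` with its `-Usage` parameter. The disk name `PhysicalDisk5` is a placeholder, not the actual name from my array.

```powershell
# List the physical disks with their current usage and status,
# to find the friendly name of the reconnected disk
Get-PhysicalDisk |
    Select-Object FriendlyName, Usage, OperationalStatus, HealthStatus

# Placeholder disk name; replace with the name reported above
Set-PhysicalDisk -FriendlyName "PhysicalDisk5" -Usage HotSpare
```

Listing the disks again afterwards should show the `Usage` column for that disk as `HotSpare`.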

My conclusion is that the hot spare behavior in Storage Spaces will work for us, as long as documentation is in place so staff understand how it works and when manual intervention is necessary.