Today I am learning about using docker-compose to run a simple dotnet core Blazor server app, and I hit a snag.

For various reasons I won’t detail right now, I want my docker container to serve my app up over HTTPS, and this requires a bit of extra configuration for dotnet core.

After producing a certificate, I managed to get my container running with a plain “docker run”, like this:

    docker run --rm -p 44381:443 -e ASPNETCORE_HTTPS_PORT=44381 -e ASPNETCORE_URLS="https://+;http://+" -e Kestrel__Certificates__Default__Path=/https/aspnetapp.pfx -e Kestrel__Certificates__Default__Password=password -v $env:USERPROFILE\.aspnet\https:/https/ samplewebapp-blazor
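The `Kestrel__Certificates__Default__*` variables work because ASP.NET Core's environment variable configuration provider treats a double underscore as the hierarchy separator. That means `Kestrel__Certificates__Default__Path` ends up as the configuration key `Kestrel:Certificates:Default:Path`. Here's a minimal Python sketch of that mapping. The function name is hypothetical, and the sketch ignores provider details such as prefix handling.

```python
def env_name_to_config_key(name: str) -> str:
    """Map an environment variable name to an ASP.NET Core-style config key.

    Simplified model: each double underscore becomes the ':' separator;
    single underscores are left alone.
    """
    return name.replace("__", ":")


for name in [
    "Kestrel__Certificates__Default__Path",
    "Kestrel__Certificates__Default__Password",
]:
    print(f"{name} -> {env_name_to_config_key(name)}")
```

The double underscore exists because `:` isn't usable in environment variable names on every platform.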

I then moved the same settings into a docker-compose file:

    version: "3.8"
    services:
      web:
        image: samplewebapp-blazor
        ports:
          - "44381:443"
        environment:
          - COMPOSE_CONVERT_WINDOWS_PATHS=1
          - ASPNETCORE_HTTPS_PORT=44381
          - ASPNETCORE_URLS="https://+;http://+"
          - Kestrel__Certificates__Default__Password="password"
          - Kestrel__Certificates__Default__Path="/https/aspnetapp.pfx"
        volumes:
          - "/c/Users/jeff.miles/.aspnet/https:/https/"

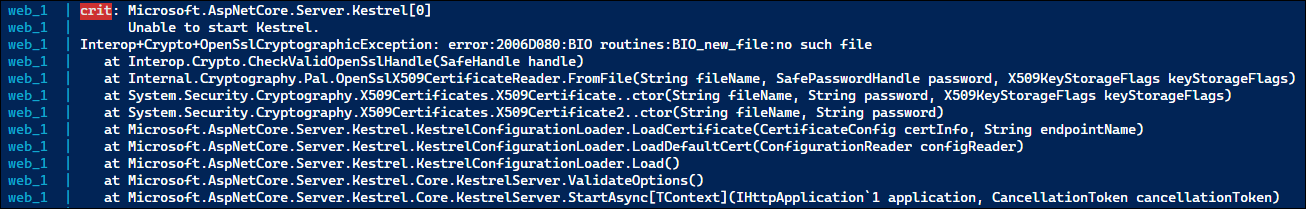

Running that with docker-compose, however, failed with this error:

    crit: Microsoft.AspNetCore.Server.Kestrel[0]
    web_1 | Unable to start Kestrel.
    web_1 | Interop+Crypto+OpenSslCryptographicException: error:2006D080:BIO routines:BIO_new_file:no such file

My first thought was, “That’s got to be referring to the certificate – I must not have the volume syntax correct, and it isn’t mounted”. So I messed around with a bunch of different ways of specifying the local mount point, investigated edge cases with WSL2 and Docker Desktop, and wasted about 45 minutes with no results.

So I tagged in my buddy Matthew for his insight, and his first suggestion was, “Is it actually mounted?” To check, I needed the container to keep running under docker-compose, so I commented out the ASPNETCORE_URLS and Kestrel environment variables. That let the container start, although I couldn’t actually hit the web app.

Then I could open a shell inside the running container with “docker exec -it containername bash”.

From there I could browse the filesystem and confirm that the volume was mounted and the certificate was present.

At that bash prompt, I set the environment variables by hand and re-ran dotnet with the same command my Docker image uses as its entrypoint. Surprisingly, the application loaded up successfully!

This told me the volume was fine, but something was wrong with the variables being passed in.
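One way to see exactly what a process received is to look for values that still have quote characters wrapped around them. The Python sketch below runs that check against a sample dictionary that mirrors the original compose file. The helper name and sample data are illustrative. Inside a real container you would pass `os.environ` instead, assuming Python is installed there (the ASP.NET Core images may not include it).

```python
from typing import Mapping

QUOTE_CHARS = "\"'"


def find_quoted_values(env: Mapping[str, str]) -> dict[str, str]:
    """Return the entries whose value is wrapped in a matching pair of quotes."""
    suspicious = {}
    for name, value in env.items():
        if len(value) >= 2 and value[0] == value[-1] and value[0] in QUOTE_CHARS:
            suspicious[name] = value
    return suspicious


# Illustrative values mirroring what the original compose file passed in.
sample_env = {
    "ASPNETCORE_HTTPS_PORT": "44381",
    "ASPNETCORE_URLS": '"https://+;http://+"',
    "Kestrel__Certificates__Default__Password": '"password"',
    "Kestrel__Certificates__Default__Path": '"/https/aspnetapp.pfx"',
}

for name, value in find_quoted_values(sample_env).items():
    # repr() makes the embedded quote characters visible in the output
    print(f"{name} = {value!r}")

# Inside a real container (if Python is available): find_quoted_values(os.environ)
```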

First, I tried taking the quotes off the value of the Kestrel__Certificates__Default__Path variable. But then docker-compose gave me this error:

    web_1 | crit: Microsoft.AspNetCore.Server.Kestrel[0]
    web_1 | Unable to start Kestrel.
    web_1 | System.InvalidOperationException: Unrecognized scheme in server address '"https://+""'. Only 'http://' is supported.
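The odd-looking address in that message makes more sense once you remember that ASPNETCORE_URLS is a semicolon-separated list. If the quotes are still attached, the first entry is `"https://+`, leading quote included, and that doesn't start with a recognized scheme. Below is a simplified Python model of the split-and-check. It is not Kestrel's actual parsing code, and the function name is hypothetical.

```python
KNOWN_SCHEMES = ("http://", "https://")


def unrecognized_addresses(urls: str) -> list[str]:
    """Split an ASPNETCORE_URLS-style value on ';' and return the entries
    that don't begin with a known scheme (simplified model)."""
    addresses = [part.strip() for part in urls.split(";") if part.strip()]
    return [addr for addr in addresses if not addr.lower().startswith(KNOWN_SCHEMES)]


quoted = '"https://+;http://+"'  # value as received, quotes attached
unquoted = "https://+;http://+"  # value as intended

print(unrecognized_addresses(quoted))
print(unrecognized_addresses(unquoted))
```

In this model the second quoted entry, `http://+"`, passes the scheme check even though it still carries a stray trailing quote. Only the first entry gets flagged.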

I decided to remove the quotes from all of the environment variables (as a shot in the dark), and, again surprisingly, it worked!

A bit of internet sleuthing later, Matthew had found a GitHub issue that explained what was going on.

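Because I was wrapping the values in quotes, the quotes were actually getting injected into the container as part of the values! Kestrel was looking for a certificate file literally named "/https/aspnetapp.pfx", quote characters included, which of course didn't exist.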
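The list form of `environment` can be modeled as splitting each entry once at the first `=` and keeping the rest verbatim. The Python sketch below is a simplified model under that assumption. The helper name `split_env_entry` is hypothetical, and this is not docker-compose's actual code. After the model comes a check showing why a path wrapped in literal quotes isn't found even when the real file exists.

```python
import os
import tempfile
from typing import Optional


def split_env_entry(entry: str) -> tuple[str, Optional[str]]:
    """Split a list-style entry such as 'NAME=value' at the first '='.

    The value is kept verbatim: surrounding quotes are NOT removed.
    An entry without '=' yields a value of None.
    """
    if "=" in entry:
        name, value = entry.split("=", 1)
        return name, value
    return entry, None


entries = [
    'ASPNETCORE_URLS="https://+;http://+"',
    'Kestrel__Certificates__Default__Path="/https/aspnetapp.pfx"',
    "Kestrel__Certificates__Default__Path=/https/aspnetapp.pfx",
]
for entry in entries:
    name, value = split_env_entry(entry)
    print(f"{name} -> {value!r}")

# Create a real file, then look it up with and without literal quotes.
with tempfile.TemporaryDirectory() as tmp:
    cert_path = os.path.join(tmp, "aspnetapp.pfx")
    open(cert_path, "wb").close()
    print(os.path.exists(cert_path))         # True
    print(os.path.exists(f'"{cert_path}"'))  # False: quotes are part of the name
```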

Here’s the end result of my compose file:

    version: "3.8"
    services:
      web:
        image: samplewebapp-blazor
        ports:
          - "44381:443"
        environment:
          - COMPOSE_CONVERT_WINDOWS_PATHS=1
          - ASPNETCORE_HTTPS_PORT=44381
          - ASPNETCORE_URLS=https://+;http://+
          - Kestrel__Certificates__Default__Password=password
          - Kestrel__Certificates__Default__Path=/https/aspnetapp.pfx
        volumes:
          - "/c/Users/jeff.miles/.aspnet/https:/https/"

It looks like, as of docker-compose 1.26 (out now), if you need quotes around environment variable values, you should put them in a .env file, which handles the quotes properly.
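By contrast, a .env parser usually treats a matching pair of outer quotes as delimiters and strips them. Here's a deliberately minimal Python sketch of that idea. The function name is hypothetical, and real .env parsers handle many more cases, such as escapes, inline comments and multi-line values.

```python
from typing import Optional


def parse_dotenv_line(line: str) -> Optional[tuple[str, str]]:
    """Parse one NAME=value line, stripping one pair of matching outer quotes.

    Blank lines, '#' comment lines, and lines without '=' return None.
    Escapes, inline comments, and multi-line values are not handled.
    """
    stripped = line.strip()
    if not stripped or stripped.startswith("#") or "=" not in stripped:
        return None
    name, value = stripped.split("=", 1)
    name, value = name.strip(), value.strip()
    if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
        value = value[1:-1]  # outer quotes are delimiters, not part of the value
    return name, value


sample = '''
# hypothetical .env contents
ASPNETCORE_URLS="https://+;http://+"
Kestrel__Certificates__Default__Path=/https/aspnetapp.pfx
'''

for line in sample.splitlines():
    parsed = parse_dotenv_line(line)
    if parsed:
        print(parsed)
```

Whether a given file's values reach the container directly or only feed variable substitution in the compose file depends on how Compose loads it. The Compose documentation for your version is the place to confirm that.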