Azure Automation runbooks are very useful – particularly for scheduled or repeated operations. One downside I have observed is that they are very often disconnected from proper version control.

Microsoft has a solution for this: source control integration.

You can connect a GitHub or Azure Repos repository to an Automation Account; the integration uses resources like Run As accounts, personal access tokens (from your repo), and automation jobs to perform the sync.

I began evaluating this for importing, publishing, and scheduling some runbooks. However, there were two key reasons I felt a different direction was better suited:

- Flexibility – I could only sync a folder named “runbooks”, and only import and publish them. What if I only wanted a certain selection of runbooks? What if I wanted to segregate runbooks between different Automation Accounts? What if I wanted to manage my schedule definition as code too? Sure, there are solutions to these problems, but they become workarounds or add-ons to the source control integration.

- Consistency – other areas where version-controlled code is released to an environment typically use continuous deployment tools; why would I treat my runbooks any differently? If I’m already going to be using Azure DevOps pipelines for these areas, I want to keep that consistency going.

There are a few different ways that runbook management could be accomplished in an Azure DevOps pipeline: Azure PowerShell, ARM templates, Azure CLI.

For simplicity and speed of development, I chose to use Azure PowerShell, run from a YAML pipeline definition.

My use case here is taking my Update Management scripts and making them run automatically on a schedule. Based on that, I have the following structure in my repository:

- PowerShell (Folder)
  - Update Management (Folder)
    - azure-pipelines.yaml
    - Set-UpdateManagementSchedule.ps1
    - Get-AzCachedAccessToken.ps1
    - New-UpdateManagementSchedule.ps1
    - Publish-AARunbookFromDevOps.ps1
    - New-EmailDelivery.ps1

Set-UpdateManagementSchedule.ps1 defines some time zones, and then iterates over them calling New-UpdateManagementSchedule.ps1 for each. At the end, it uses New-EmailDelivery.ps1 to send results by email.

New-UpdateManagementSchedule.ps1 calls Get-AzCachedAccessToken.ps1, which uses the existing Az PowerShell context to create a bearer token and authenticate against the Azure REST API (where I’m using Update Management endpoints).
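To make the token idea concrete, here is a minimal sketch. It is not the contents of the actual Get-AzCachedAccessToken.ps1. It assumes the `Get-AzAccessToken` cmdlet from the Az.Accounts module is available and that an Az context already exists (for example from `Connect-AzAccount` or the pipeline task). The function names `ConvertTo-BearerHeader` and `Get-ArmAuthHeader` are hypothetical. Depending on the Az.Accounts version, the token may come back as a plain string or a `SecureString`, so the helper accepts both.

```powershell
# Hypothetical helper: builds request headers from a token that may be either
# a plain string or a SecureString (this varies by Az.Accounts version).
function ConvertTo-BearerHeader {
    param(
        [Parameter(Mandatory)]
        [object]$Token
    )
    if ($Token -is [System.Security.SecureString]) {
        # NetworkCredential can expose the plain text on Windows PowerShell and PowerShell 7
        $Token = [System.Net.NetworkCredential]::new('', $Token).Password
    }
    return @{
        'Authorization' = "Bearer $Token"
        'Content-Type'  = 'application/json'
    }
}

# Hypothetical wrapper: reuses the current Az context to request a token
# for Azure Resource Manager and turns it into headers.
function Get-ArmAuthHeader {
    $accessToken = Get-AzAccessToken -ResourceUrl 'https://management.azure.com/'
    return ConvertTo-BearerHeader -Token $accessToken.Token
}
```

The resulting hashtable can be passed to `Invoke-RestMethod` through its `-Headers` parameter when calling whichever REST endpoint you need.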

Publish-AARunbookFromDevOps.ps1 is my script that gets called within the DevOps pipeline, to import and publish the runbooks to the automation account.

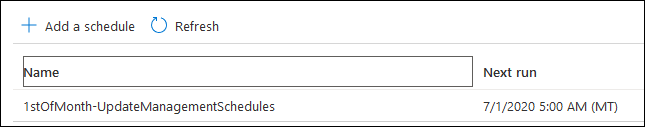

I want Set-UpdateManagementSchedule.ps1 to run once per month, because it calculates the appropriate timing of Update Management schedules. I don’t want any of the other scripts to have a schedule at all, since they’re just referenced at some point in time.
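A monthly schedule like the one described above could be created with Azure PowerShell roughly as follows. This is a sketch under assumptions, not my actual script: the function names, the default schedule name, the 06:00 start hour, and the choice of the first day of the month are all hypothetical placeholders. The cmdlets used are `New-AzAutomationSchedule` and `Register-AzAutomationScheduledRunbook` from the Az.Automation module.

```powershell
# Hypothetical helper: returns the first day of the month after $From, at $Hour:00.
function Get-NextMonthlyStart {
    param(
        [datetime]$From = (Get-Date),
        [int]$Hour = 6   # assumed start hour, adjust as needed
    )
    $firstOfThisMonth = [datetime]::new($From.Year, $From.Month, 1, $Hour, 0, 0)
    return $firstOfThisMonth.AddMonths(1)
}

# Hypothetical wrapper: creates a monthly schedule and links it to one runbook.
function New-MonthlyRunbookSchedule {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$AutomationAccountName,
        [Parameter(Mandatory)][string]$RunbookName,
        [string]$ScheduleName = 'Monthly-FirstDay'   # assumed name
    )
    $common = @{
        ResourceGroupName     = $ResourceGroupName
        AutomationAccountName = $AutomationAccountName
    }
    # Runs on day one of every month, starting next month
    New-AzAutomationSchedule @common -Name $ScheduleName `
        -StartTime (Get-NextMonthlyStart) -MonthInterval 1 -DaysOfMonth One | Out-Null
    # Link the schedule to the runbook
    Register-AzAutomationScheduledRunbook @common -RunbookName $RunbookName `
        -ScheduleName $ScheduleName | Out-Null
}
```

Only the one runbook that needs a schedule would be passed to the wrapper; the helper runbooks are simply imported and published without one.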

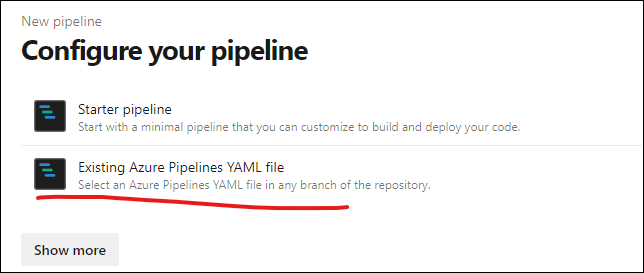

In order to make this all happen, I created a DevOps pipeline, stored within the “azure-pipelines.yaml” file.

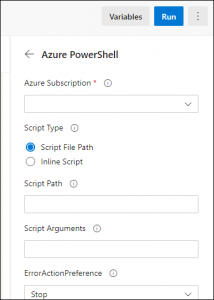

Storing the pipeline as code in YAML is very advantageous, but it is difficult to write from scratch. Often I will create a dummy pipeline in the GUI, using the Task Assistant (see below) and the Azure DevOps task docs to understand the syntax and components of what I’m trying to achieve.

I can then take the YAML that has been progressively built, store it in my repository, and create a fresh pipeline from it.

In this case, my pipeline starts with a trigger definition, for which I learned and utilized the path-based rules that Azure Repos supports:

    trigger:
      branches:
        include:
        - master
      paths:
        include:
        - PowerShell/UpdateManagement/*

This will ensure the pipeline only triggers when a runbook within the UpdateManagement folder is modified, rather than my whole repository.

Using the AzurePowerShell task, I can trust that authentication to Azure will be handled appropriately as long as I supply a service connection (shown as the “azureSubscription” property below).

    - task: AzurePowerShell@5
      inputs:
        azureSubscription: 'automation-rg' #This is the devops service connection name
        ErrorActionPreference: 'Stop'
        FailOnStandardError: true
        ScriptType: 'FilePath'
        ScriptPath: './PowerShell/UpdateManagement/Publish-AARunbookFromDevOps.ps1'
        ScriptArguments: -runbookname Get-AzCachedAccessToken `
                         -runbookfile ./PowerShell/UpdateManagement/Get-AzCachedAccessToken.ps1 #`
                         #-scheduleName $(userPassword)
        azurePowerShellVersion: 'LatestVersion'
      displayName: Deploy AA Runbook - Get-AzCachedAccessToken

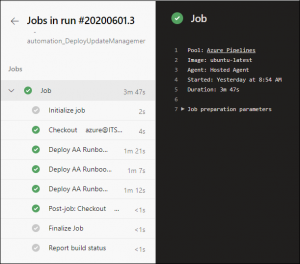

For each Runbook that I want to handle in my pipeline, I’m calling the “Publish-AARunbookFromDevOps.ps1” script and supplying arguments related to that specific runbook. I wanted to break it out this way to give me the most flexibility to handle runbooks independently.

The script is fairly static right now; I’m making a bunch of hard-coded assumptions such as “we will always overwrite the runbook if it exists rather than comparison checking”, “every import needs a publish”, and “only 1 kind of schedule is going to be used”.
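The first two assumptions (always overwrite, always publish) can be sketched in a few lines. This is an illustration rather than the real Publish-AARunbookFromDevOps.ps1: the function names and the resource group and Automation Account parameters are hypothetical, and the real script may hard-code those values instead. `Import-AzAutomationRunbook` and `Publish-AzAutomationRunbook` are cmdlets from the Az.Automation module.

```powershell
# Hypothetical helper: builds the splat for Import-AzAutomationRunbook.
function Get-RunbookImportParams {
    param(
        [Parameter(Mandatory)][string]$RunbookName,
        [Parameter(Mandatory)][string]$RunbookFile,
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$AutomationAccountName
    )
    return @{
        Name                  = $RunbookName
        Path                  = $RunbookFile
        Type                  = 'PowerShell'
        ResourceGroupName     = $ResourceGroupName
        AutomationAccountName = $AutomationAccountName
        Force                 = $true   # always overwrite an existing runbook
    }
}

# Hypothetical wrapper: every import is followed by a publish.
function Publish-RunbookSketch {
    param(
        [Parameter(Mandatory)][string]$RunbookName,
        [Parameter(Mandatory)][string]$RunbookFile,
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$AutomationAccountName
    )
    # Parameter names match, so the bound parameters can be splatted straight through
    $importParams = Get-RunbookImportParams @PSBoundParameters
    Import-AzAutomationRunbook @importParams | Out-Null
    Publish-AzAutomationRunbook -Name $RunbookName `
        -ResourceGroupName $ResourceGroupName `
        -AutomationAccountName $AutomationAccountName | Out-Null
}
```

Keeping the parameter construction separate from the Azure calls makes it easy to check what would be sent without touching an Automation Account.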

Limiting the schedule to my hard-coded values reduced the time to deploy this in my environment, because I didn’t have a need right now for further manipulation. This idea is related to “YAGNI”, or “You Aren’t Gonna Need It”, which I recently read about from Martin Fowler.

When the pipeline runs, it performs all its tasks in about three and a half minutes.

I can see that it has created a Schedule in my Automation Account and linked it to the runbook.

Check out the README.md from the GitHub repo for details on re-using this code for yourself.

Awesome Jeff, I've been bashing my head against this for days trying to get the pipeline to trigger the sync. The sync works, but the YAML/pipeline is my issue. Can't wait to give it a go now that I've found these.