Yesterday I learned something new: it’s possible for a switch to stop operating as a switch and start flooding all unicast packets out every interface. This is something I just solved on a Dell PowerConnect 5548 switch.

In retrospect, this happened a few months ago too, but at the time I couldn’t spend any time troubleshooting, and rebooting the switch resolved the problem. This time I wanted to get to the source of the problem.

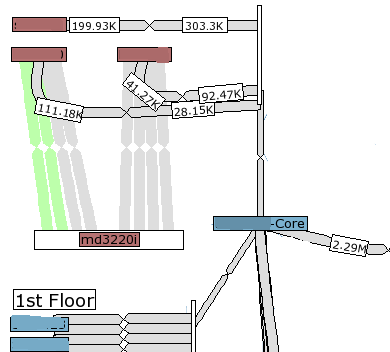

I first noticed a problem when accessing network resources was a bit slower than normal. I took a quick look at our network weathermap (a combination of Cacti and the weathermap plugin) and noticed that all ports coming out of our 5548 were pushing ~90 Mbps of traffic, which is definitely not normal.

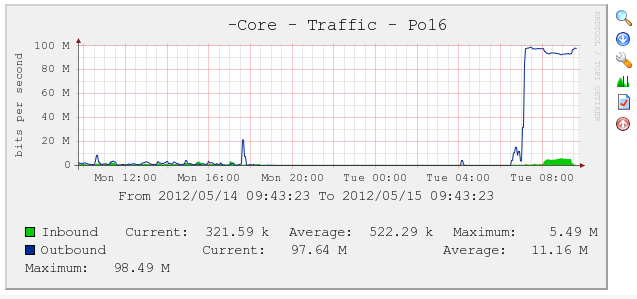

I logged into our Cacti interface and took a look at the graph for one of the interfaces on that switch:

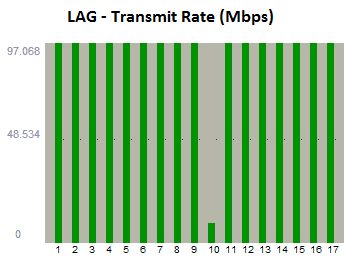

Based on that graph, I could see that the traffic started Tuesday morning and was pretty consistent. The interesting thing is that this was happening on ALL the interfaces on the switch, including the link aggregation groups.

At this point I spent quite a bit of time trying to figure out whether it was a reporting problem (since the devices on the other end of those connections weren’t reporting high traffic) or an actual traffic issue. I hadn’t heard of unicast flooding before, so I didn’t immediately start looking there.

I started a Port Mirror from one of the ports that should have almost zero traffic, and Wireshark gave me hundreds of thousands of packets within a few seconds, all for devices not actually on the port I was mirroring. At this point I understood what was happening, but not why.
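
A rough way to put a number on what that capture shows is to count how many frames are unicast yet addressed to a MAC that doesn't live on the mirrored port. The sketch below assumes the destination MACs have already been exported from the capture into a plain list; the function names and the sample addresses are made up for illustration.

```python
def is_unicast(mac: str) -> bool:
    """A MAC is unicast when the lowest bit of its first octet is 0."""
    first_octet = int(mac.split(":")[0], 16)
    return first_octet & 1 == 0


def flooded_fraction(dst_macs, local_macs):
    """Fraction of captured frames that are unicast but addressed to a
    MAC that is not behind the mirrored port."""
    if not dst_macs:
        return 0.0
    local = {m.lower() for m in local_macs}
    stray = sum(1 for m in dst_macs if is_unicast(m) and m.lower() not in local)
    return stray / len(dst_macs)


# Hypothetical capture: destination MACs seen on the mirrored port.
capture = [
    "00:11:22:33:44:55",  # unicast to some other host (stray)
    "00:11:22:33:44:66",  # unicast to some other host (stray)
    "ff:ff:ff:ff:ff:ff",  # broadcast, expected on every port
    "00:aa:bb:cc:dd:ee",  # unicast to the host on this port
]
local_hosts = ["00:AA:BB:CC:DD:EE"]

print(flooded_fraction(capture, local_hosts))  # 0.5
```

On a healthy switch that fraction should sit close to zero, since only broadcast, multicast and the occasional unknown destination reach a port that isn't the target.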

A quick Google search led me to the term “Unicast Flood” and some probable causes, but none of them really applied. My network topology is flat, a single VLAN with no STP. CPU utilization was low, and the address table only had 8 entries in it.

Wait, 8 entries? A core switch should have hundreds of entries in its address table, right? I was experiencing a unicast flood because the switch wasn’t properly storing MAC addresses in its table, causing almost all the traffic to be pushed out every interface.
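
To make the mechanism concrete, here is a toy model of a learning switch. It is only a sketch of the general forwarding rule (known destination goes out one port, unknown destination goes out every other port), not of how the 5548 works internally; the class, the `learning_enabled` flag and the one-letter MAC names are all invented for the example.

```python
class LearningSwitch:
    """Toy layer-2 switch: learns source MACs, floods unknown destinations."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}           # MAC address -> port it was last seen on
        self.learning_enabled = True  # hypothetical flag to mimic the failure

    def receive(self, in_port, src, dst):
        """Return the list of ports the frame is sent out of."""
        if self.learning_enabled:
            self.mac_table[src] = in_port
        out_port = self.mac_table.get(dst)
        if out_port is None:
            # Unknown destination: flood out every port except the ingress one.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the ingress port, nothing to forward
        return [out_port]


sw = LearningSwitch([1, 2, 3, 4])
print(sw.receive(1, "A", "B"))  # [2, 3, 4]  B not learned yet, so flood
print(sw.receive(2, "B", "A"))  # [1]        A was learned on port 1
print(sw.receive(1, "A", "B"))  # [2]        B was learned on port 2

# Mimic the failure: entries are gone and nothing new gets learned.
sw.learning_enabled = False
sw.mac_table.clear()
print(sw.receive(1, "A", "B"))  # [2, 3, 4]  every frame is flooded again
print(sw.receive(2, "B", "A"))  # [1, 3, 4]
```

With learning broken, the table never refills, so every unicast frame takes the flood path. That is consistent with a nearly empty address table and traffic showing up on ports that should be idle.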

Back to Google, and I eventually came across the following in release notes from firmware in October 2011:

| Description | User Impact | Resolution |
| --- | --- | --- |
| Devices stop to learn MAC addresses after 49.7 days | After 49.7 days of operation, the device stops re-learning MAC addresses. These MACs which were previously learned will not appear in MAC address table. As a result traffic streams sent to previously learned MAC addresses are treated as unknown-unicast traffic and flooded within the VLAN. | MAC address learning mechanism was fixed so that both learning new addresses and re-learning existing addresses are updating the MAC Address database. |
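
The 49.7-day figure is a familiar one: it is how long an unsigned 32-bit counter of milliseconds takes to wrap around to zero. The release notes don't name the root cause, so the counter explanation is an assumption here, but the arithmetic is easy to check. The function names below are invented for the sketch.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000  # 86,400,000 milliseconds in a day


def wrap_days(bits=32, tick_ms=1):
    """Days until an unsigned counter of `bits` bits, ticking every
    `tick_ms` milliseconds, wraps back to zero."""
    return (2 ** bits) * tick_ms / MS_PER_DAY


def advance(counter, elapsed_ms, bits=32):
    """Advance a fixed-width uptime counter, wrapping on overflow."""
    return (counter + elapsed_ms) % (2 ** bits)


print(round(wrap_days(), 1))    # 49.7
print(advance(2 ** 32 - 1, 1))  # 0
```

If the timestamps used for learning or aging came from a counter like this, any comparison of "now" against a stored timestamp could start misbehaving once the counter wraps, which would fit a bug that only appears after the device has been up that long.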

That’s one mighty big bug to be on a core switch. Turns out that I hadn’t updated the switch to the latest firmware when I first received it in February 2012 (nor was it shipped with current firmware) which is a very uncharacteristic thing for me to do.

Today I updated the firmware to the latest, and we’ll see what happens in 49.7 days.

Thanks for this info. I could see I had a problem with just the one switch, and eventually found it was the address table, but didn’t have a clue what setting I had wrong to cause it. Nice to know it wasn’t my fault.

Seems to have gone away after the new firmware and reboot – did yours stay good after the 49.7 days in the end?

Hi Tom, glad that this helped you.

I have passed the 49.7 day mark by quite a bit, and the problem has never reoccurred, so if you’ve applied the new firmware you should be confident that it’s resolved.

Dude…

We were going crazy over intermittent total network outages for some weeks, trying to figure out what the hell was happening in our network.

After a lot of debugging, checking infrastructure, sniffing packets etc, I finally had enough ammo to do a search on Google that hit this article.

Exactly in the same boat, solved by upgrading firmware on our switches.

Thanks so much for this write up!

Samy

Samy, happy to help. It is a pretty obscure issue with devastating consequences. I realized I hadn’t updated the post to say that this was actually my problem; I have done so now.