I’m currently building a container on a Windows Server Core base image with IIS. The intention is to run it in Azure Kubernetes Service (AKS), on Windows node pools.

A very useful resource in understanding the IIS concepts discussed in this post comes from Octopus: https://octopus.com/blog/iis-powershell#recap-iis-theory

One of the challenges I’m working with is the desire to meet both these requirements:

- Always place our application in a consistent and standard path (like c:\app)
- Serve the app behind customizable virtual paths
  - For example, /env/app/webservice or /env/endpoint
  - These virtual paths should be specified at runtime, not in the container build (to reduce the number of unique containers)
  - A unique domain cannot be required for each application

The thinking is that while testing the application locally, I want to be able to reach it at the root path (e.g. http://localhost:8080/), but when it is deployed as part of a distributed system, I want to serve it behind a customizable path.

In AKS, using the ingress-nginx controller, I can use the “rewrite-target” annotation so that my ingress represents the virtual path while the application stays at the root of IIS in the container. However, this quickly falls down for applications that emit non-relative links for stylesheets and JavaScript includes: the application does not know about the prefix, so those links point at the root of the ingress instead of the virtual path.

One idea was to place the application in the root (c:\inetpub\wwwroot) and then add a new Application on my virtual path pointing to the same physical path. However, this caused problems with the web.config being applied twice, because it was picked up from the physical path by both the root Application and my virtual path Application. This could be mitigated in the web.config with “<location inheritInChildApplications="false">” tags, but I also realized I don’t need BOTH requirements to be available at the same time. If a variable virtual path is passed into my container, I don’t need the application served at the root.

With this in mind, I set about creating logic like this:

- In the Dockerfile, place the application at c:\app
- If the environment variable “VirtualPath” exists
  - Create an IIS Application at the supplied virtual path, with a physical path of c:\app
- Else
  - Change the physical path of “Default Web Site” to c:\app

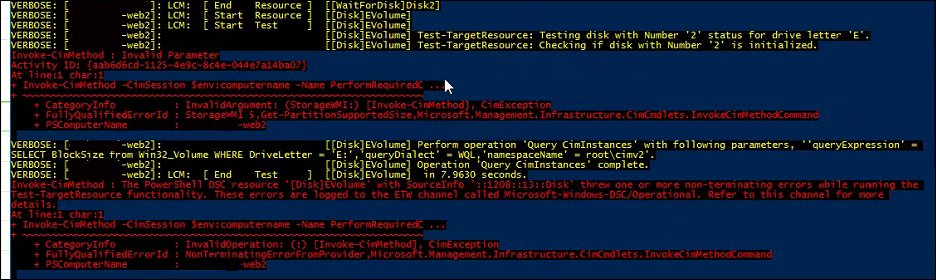

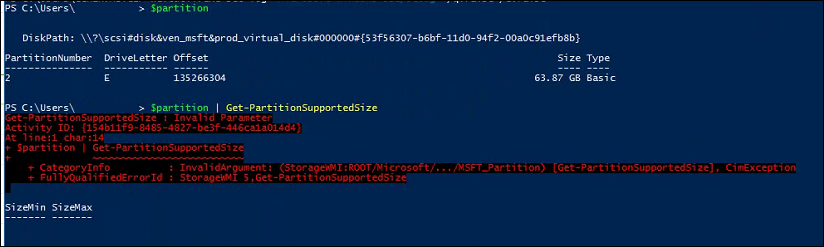

I tested this in the GUI on a Windows Server 2019 test virtual machine, and it appeared to work for my application just fine. However, when I tested using PowerShell (intending to move functional code into my docker run.ps1 script), unexpected errors occurred.

Here’s what I was attempting:

    New-WebApplication -Name "envtest/app1/webservice" -Site "Default Web Site" -PhysicalPath "C:\inetpub\wwwroot" -ApplicationPool "DefaultAppPool"

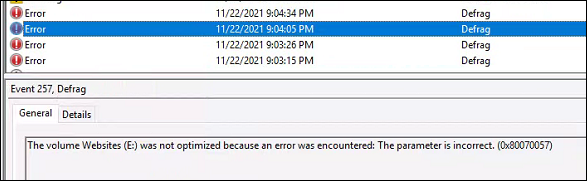

And here is the error it produced for me:

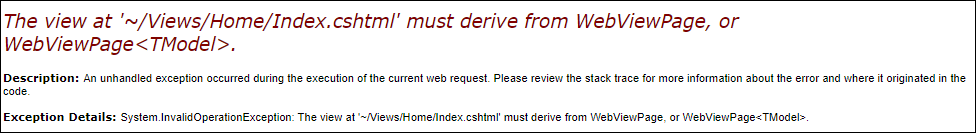

Interestingly, displaying straight HTML within this virtual path for the Application works just fine – it is only the MVC app that has an error.

The application I’m testing with is an ASP.NET MVC application, but none of the common solutions to this problem are relevant. The application works just fine at the root of a website, just not when applied under a virtual path.

Using the context from the Octopus link above, I began digging a little deeper and testing, primarily by looking at the ApplicationHost.config file located at “C:\windows\system32\inetsrv\Config”.

When I manually created my pathing in the GUI (creating each virtual subdirectory in turn), which was successful, the structure within the Site in this config file looked like this:

    <site name="Default Web Site" id="1">
        <application path="/">
            <virtualDirectory path="/" physicalPath="%SystemDrive%\inetpub\wwwroot" />
            <virtualDirectory path="/envtest" physicalPath="%SystemDrive%\inetpub\wwwroot" />
            <virtualDirectory path="/envtest/app1" physicalPath="%SystemDrive%\inetpub\wwwroot" />
        </application>
        <application path="envtest/app1/webservice" applicationPool="DefaultAppPool">
            <virtualDirectory path="/" physicalPath="C:\inetpub\wwwroot" />
        </application>
        <bindings>
            <binding protocol="http" bindingInformation="*:80:" />
        </bindings>
        <logFile logTargetW3C="ETW" />
    </site>

However, when I used the PowerShell example above, this is what was generated:

    <site name="Default Web Site" id="1">
        <application path="/">
            <virtualDirectory path="/" physicalPath="%SystemDrive%\inetpub\wwwroot" />
        </application>
        <application path="envtest/app1/webservice" applicationPool="DefaultAppPool">
            <virtualDirectory path="/" physicalPath="C:\inetpub\wwwroot" />
        </application>
        <bindings>
            <binding protocol="http" bindingInformation="*:80:" />
        </bindings>
        <logFile logTargetW3C="ETW" />
    </site>

It seems clear that while IIS can serve static content under the virtual path I created, MVC fails when the intermediate virtual directories (/envtest and /envtest/app1) are missing from the root Application.

When I expanded my manual PowerShell implementation to create those intermediate virtual directories first, the application began to work without error:

    New-WebVirtualDirectory -Name "/envtest" -Site "Default Web Site" -PhysicalPath "C:\inetpub\wwwroot"
    New-WebVirtualDirectory -Name "/envtest/app1" -Site "Default Web Site" -PhysicalPath "C:\inetpub\wwwroot"
    New-WebApplication -Name "/envtest/app1/webservice" -PhysicalPath "C:\app\" -Site "Default Web Site" -ApplicationPool "DefaultAppPool"

I could then confirm that my ApplicationHost.config file matched what was created in the GUI.
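Comparing the two config fragments by eye works, but it can also be done with a small script. The following is a minimal sketch, not something from my actual run.ps1: `Get-SitePathSummary` is a hypothetical helper name, and it assumes you pass it just the `<site>` fragment rather than the whole ApplicationHost.config. It lists one row per virtual directory so that missing intermediate paths stand out.

```powershell
# Hypothetical helper: flatten a <site> fragment into one row per virtual directory
function Get-SitePathSummary {
    param(
        [Parameter(Mandatory)]
        [xml] $SiteXml
    )

    foreach ($app in $SiteXml.site.application) {
        foreach ($vdir in $app.virtualDirectory) {
            [pscustomobject]@{
                Application      = $app.path
                VirtualDirectory = $vdir.path
                PhysicalPath     = $vdir.physicalPath
            }
        }
    }
}

# Sample input: the fragment generated by the first PowerShell attempt
$fragment = @'
<site name="Default Web Site" id="1">
  <application path="/">
    <virtualDirectory path="/" physicalPath="%SystemDrive%\inetpub\wwwroot" />
  </application>
  <application path="envtest/app1/webservice" applicationPool="DefaultAppPool">
    <virtualDirectory path="/" physicalPath="C:\inetpub\wwwroot" />
  </application>
</site>
'@

Get-SitePathSummary -SiteXml $fragment | Format-Table -AutoSize
```

For the failing fragment this returns only two rows (the root and the application), whereas the GUI-created fragment would also show /envtest and /envtest/app1 under the root Application.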

The last piece of this for me was turning a VirtualPath environment variable, which could contain a path of any depth, into the correct set of IIS virtual directories and applications.

Here’s an example of how I’m doing that:

    # The IIS: drive and the New-Web* cmdlets both come from this module,
    # so load it before either branch runs
    Import-Module WebAdministration

    if (Test-Path "ENV:VirtualPath")
    {
        Write-Host "Virtual Path is passed, will configure IIS web application"

        # Trim leading and trailing forward slashes in case they were supplied,
        # then split the path into its elements
        $virtualPath = $ENV:VirtualPath.Trim("/")
        $segments = $virtualPath.Split("/")

        # We have to ensure the Application/VirtualDirectory in IIS gets created properly in the event of multiple elements in the path
        # Otherwise IIS won't serve some applications properly, like ASP.NET MVC sites

        # For each element in the Virtual Path, excluding the last leaf.
        # A for loop is used because a range like 0..-1 counts backwards
        # and would misbehave for a single-element path.
        for ($leaf = 0; $leaf -lt $segments.Count - 1; $leaf++) {
            # Join all elements up to and including this one, e.g. "envtest", then "envtest/app1"
            $usepath = $segments[0..$leaf] -join "/"

            New-WebVirtualDirectory -Name "$usepath" -Site "Default Web Site" -PhysicalPath "C:\inetpub\wwwroot" # Don't specify Application, default to root
        }

        # Create Application with the full Virtual Path (making last element effective)
        New-WebApplication -Name "$virtualPath" -PhysicalPath "C:\app\" -Site "Default Web Site" -ApplicationPool "DefaultAppPool" # Expect no beginning forward slash
    } else {
        # Since no virtual path was passed, we want Default Web Site to point to C:\app
        Set-ItemProperty -Path "IIS:\Sites\Default Web Site" -Name "physicalPath" -Value "C:\app\"
    }
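The path-splitting step is the part most likely to go wrong, and it can be tried out without IIS installed. Here is a minimal sketch that isolates it; `Get-VirtualPathPrefix` is a hypothetical helper name of my own, not a WebAdministration cmdlet. It returns each parent prefix of a virtual path, which are the names that would be passed to New-WebVirtualDirectory.

```powershell
# Hypothetical helper (an illustration, not part of WebAdministration):
# returns every cumulative parent prefix of a virtual path, excluding the full path
function Get-VirtualPathPrefix {
    param(
        [Parameter(Mandatory)]
        [string] $VirtualPath
    )

    # Drop leading/trailing slashes and ignore empty elements such as in "a//b"
    $segments = @($VirtualPath.Trim('/').Split('/') | Where-Object { $_ -ne '' })

    # Emit "a", then "a/b", and so on, stopping before the last element
    for ($i = 0; $i -lt $segments.Count - 1; $i++) {
        $segments[0..$i] -join '/'
    }
}

# Example: list the virtual directories that would be created
Get-VirtualPathPrefix -VirtualPath '/envtest/app1/webservice'
```

For the example path this emits envtest and envtest/app1. A single-element path such as /app emits nothing, so only the Application itself would be created.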