Today while exploring the Azure Kubernetes Service docs, specifically looking at Storage, I came across a note about StorageClasses:

> You can create a StorageClass for additional needs using kubectl

This, combined with the fact that the default StorageClasses for Managed Disks are Premium and Standard SSD, led me to ask: “what if I want a Standard HDD for my pod?”

This is absolutely possible!

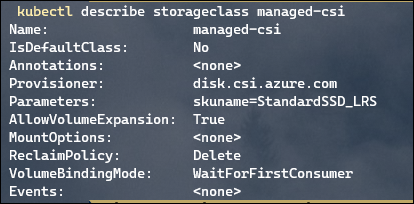

First I took a look at the parameters for an existing StorageClass, the ‘managed-csi’:
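For reference, this is one way to view those parameters from the command line. It is a minimal sketch that assumes your kubectl context already points at the AKS cluster and that the built-in ‘managed-csi’ class exists there:

```shell
# List the StorageClasses available in the cluster
kubectl get storageclass

# Print the full definition of the built-in class, including
# its provisioner and the parameters block (skuname)
kubectl get storageclass managed-csi -o yaml
```

The `provisioner` and `parameters` fields in that output are the ones worth copying into a custom class.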

While the example provided in the link above uses the old ‘in-tree’ method for StorageClasses, this gave me the proper provisioner value to use the Container Storage Interface (CSI) driver instead.

I created a yaml file with these contents:

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: managed-csi-hdd
    provisioner: disk.csi.azure.com
    reclaimPolicy: Retain
    allowVolumeExpansion: true
    volumeBindingMode: WaitForFirstConsumer
    parameters:
      skuname: StandardHDD_LRS

In reality, I took a guess at the “skuname” parameter here, replacing “StandardSSD_LRS” with “StandardHDD_LRS”. Having used Terraform with Managed Disk SKUs before, I figured this wasn’t going to be valid, but I wanted to see what would happen.

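Then I ran ‘kubectl apply -f filename.yaml’ to create my StorageClass. This worked without any errors, because the parameters are passed through to the provisioner and are only checked when a volume is actually provisioned.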

To test, I created a PersistentVolumeClaim, and then a simple Pod, with this yaml:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: test-hdd-disk
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: managed-csi-hdd
      resources:
        requests:
          storage: 5Gi
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: teststorage-pod
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
        - name: teststorage
          image: mcr.microsoft.com/oss/nginx/nginx:1.15.5-alpine
          volumeMounts:
            - mountPath: "/mnt/azurehdd"
              name: hddvolume
      volumes:
        - name: hddvolume
          persistentVolumeClaim:
            claimName: test-hdd-disk

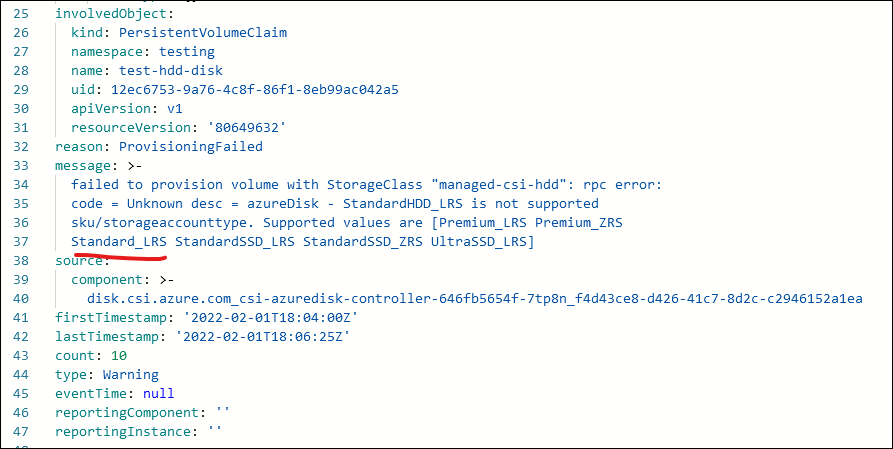

After applying this with kubectl, my PersistentVolumeClaim was stuck in a Pending state, and the Pod wouldn’t start. I looked at the Events of my PersistentVolumeClaim, and found an error as expected:
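If you want to pull up the same events yourself, commands along these lines should work. This is a sketch that assumes the claim lives in the current namespace; the exact wording of the provisioning error will depend on your driver version:

```shell
# Show the claim's status (expected: Pending)
kubectl get pvc test-hdd-disk

# The Events section at the bottom of the describe output
# carries the provisioning failure reported by the CSI driver
kubectl describe pvc test-hdd-disk

# The Pod's events show why it is waiting on the volume
kubectl describe pod teststorage-pod
```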

This is telling me my ‘skuname’ value isn’t valid and instead I should be using a supported type like “Standard_LRS”.

Using kubectl I deleted my Pod, PersistentVolumeClaim, and StorageClass, modified my yaml, and re-applied.
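The cleanup and re-apply looked roughly like the following. The two file names are hypothetical placeholders for wherever you saved the StorageClass and the PVC/Pod definitions:

```shell
# Delete in dependency order: the Pod first, then the claim it uses,
# then the StorageClass the claim refers to
kubectl delete pod teststorage-pod
kubectl delete pvc test-hdd-disk
kubectl delete storageclass managed-csi-hdd

# Re-apply after changing skuname in the StorageClass file
# (storageclass.yaml and pvc-pod.yaml are assumed file names)
kubectl apply -f storageclass.yaml
kubectl apply -f pvc-pod.yaml
```

Deleting the Pod before the claim avoids the claim hanging in a Terminating state while it is still in use.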

This time, the claim was created successfully, and a persistent volume was dynamically generated. I can see that disk created as the correct type in the Azure Portal listing of disks:
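The same result can be checked from kubectl, as a quick sketch assuming the resource names used earlier in this post:

```shell
# The claim should now report a Bound status
kubectl get pvc test-hdd-disk

# The dynamically provisioned volume shows up here, along with
# its StorageClass (managed-csi-hdd) and reclaim policy (Retain)
kubectl get pv
```

Because the StorageClass sets `reclaimPolicy: Retain`, the PersistentVolume and its underlying disk stay around after the claim is deleted and need to be cleaned up separately.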

The supported values listed in that error message also tell me I can create ZRS-enabled StorageClasses, but only for Premium and Standard SSD managed disks.
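As an illustration of the ZRS option, here is a sketch that writes out a zone-redundant Standard SSD class. I have not tested this one; the class name and file name are made up for the example, and it assumes your region offers ZRS managed disks:

```shell
# Write a StorageClass definition for zone-redundant Standard SSD disks.
# "managed-csi-ssd-zrs" is a hypothetical name chosen for this example.
cat > managed-csi-ssd-zrs.yaml <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: managed-csi-ssd-zrs
provisioner: disk.csi.azure.com
reclaimPolicy: Retain
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
parameters:
  skuname: StandardSSD_ZRS
EOF
```

It can then be applied with ‘kubectl apply -f managed-csi-ssd-zrs.yaml’, the same way as the HDD class.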

Here’s the properly functioning yaml for the StorageClass, with the skuname fixed:

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: managed-csi-hdd
    provisioner: disk.csi.azure.com
    reclaimPolicy: Retain
    allowVolumeExpansion: true
    volumeBindingMode: WaitForFirstConsumer
    parameters:
      skuname: Standard_LRS