One of my Packer builds for a Windows image uses AzCopy to download files from Azure Blob Storage. Under some circumstances, the AzCopy `copy` command failed with a Go runtime error like this:

    2022/01/06 10:00:02 ui: hyperv-vmcx: Job e1fcf7c7-f32e-d247-79aa-376ef5d49bd6 has started
    2022/01/06 10:00:02 ui: hyperv-vmcx: Log file is located at: C:\Users\cxadmin\.azcopy\e1fcf7c7-f32e-d247-79aa-376ef5d49bd6.log
    2022/01/06 10:00:02 ui: hyperv-vmcx:
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime: VirtualAlloc of 8388608 bytes failed with errno=1455
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: fatal error: out of memory
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx:
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime stack:
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime.throw(0xbeac4b, 0xd)
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: /opt/hostedtoolcache/go/1.16.0/x64/src/runtime/panic.go:1117 +0x79
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime.sysUsed(0xc023d94000, 0x800000)
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: /opt/hostedtoolcache/go/1.16.0/x64/src/runtime/mem_windows.go:83 +0x22e
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime.(*mheap).allocSpan(0x136f960, 0x400, 0xc000040100, 0xc000eb9b00)
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: /opt/hostedtoolcache/go/1.16.0/x64/src/runtime/mheap.go:1271 +0x3b1
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime.(*mheap).alloc.func1()
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: /opt/hostedtoolcache/go/1.16.0/x64/src/runtime/mheap.go:910 +0x5f
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime.systemstack(0x0)
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: /opt/hostedtoolcache/go/1.16.0/x64/src/runtime/asm_amd64.s:379 +0x6b
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime.mstart()
    2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: /opt/hostedtoolcache/go/1.16.0/x64/src/runtime/proc.go:1246

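Notice the "fatal error: out of memory" line. Just before it, the Go runtime's `VirtualAlloc` call for an 8 MiB (8388608-byte) block failed with errno 1455, which on Windows is `ERROR_COMMITMENT_LIMIT` ("The paging file is too small for this operation to complete").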

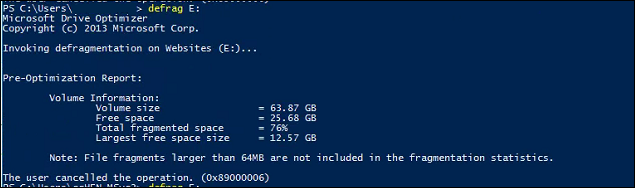

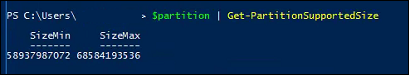
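If you want a build script to recognise this particular crash (for example, to tell it apart from an ordinary AzCopy transfer failure), a minimal Python sketch could scan the captured Packer output for the Go runtime's out-of-memory message. It assumes you already have the log as text (for instance via Packer's `PACKER_LOG`/`PACKER_LOG_PATH` environment variables); `find_go_oom` and `GoOomInfo` are hypothetical helper names, not part of Packer or AzCopy.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches the Go runtime line printed when VirtualAlloc fails on Windows, e.g.
# "runtime: VirtualAlloc of 8388608 bytes failed with errno=1455".
VIRTUALALLOC_RE = re.compile(r"VirtualAlloc of (\d+) bytes failed with errno=(\d+)")

# Windows system error 1455 is ERROR_COMMITMENT_LIMIT
# ("The paging file is too small for this operation to complete").
ERROR_COMMITMENT_LIMIT = 1455


@dataclass
class GoOomInfo:
    requested_bytes: Optional[int]  # size of the failed VirtualAlloc, if it was logged
    errno: Optional[int]            # Windows error code, if it was logged

    @property
    def commit_limit_hit(self) -> bool:
        return self.errno == ERROR_COMMITMENT_LIMIT


def find_go_oom(log_text: str) -> Optional[GoOomInfo]:
    """Return details of a Go 'fatal error: out of memory' crash, or None if absent."""
    if "fatal error: out of memory" not in log_text:
        return None
    match = VIRTUALALLOC_RE.search(log_text)
    if match is None:
        # The OOM was reported, but without the VirtualAlloc detail line.
        return GoOomInfo(requested_bytes=None, errno=None)
    return GoOomInfo(requested_bytes=int(match.group(1)), errno=int(match.group(2)))


if __name__ == "__main__":
    sample = (
        "2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: runtime: VirtualAlloc of 8388608 bytes failed with errno=1455\n"
        "2022/01/06 10:00:06 ui error: ==> hyperv-vmcx: fatal error: out of memory\n"
    )
    info = find_go_oom(sample)
    print(info.requested_bytes // (1024 * 1024), "MiB", info.commit_limit_hit)  # prints "8 MiB True"
```

Keying on the literal "fatal error: out of memory" text keeps the check narrow; the `VirtualAlloc` line is treated as optional detail because the exact runtime output can vary between Go versions.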

I had already set the AzCopy environment variable `AZCOPY_BUFFER_GB` to 1 (GB), and I had also increased the pagefile size (since Windows doesn't always grow it reliably on demand), but neither change helped.
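For reference, this is roughly how the buffer limit gets applied when AzCopy is launched from a script (as described above, it didn't cure the crash on its own here). The sketch below is Python rather than whatever the Packer provisioner actually runs, and it assumes `azcopy` is on `PATH`; the helper names `azcopy_env`, `azcopy_copy_cmd` and `run_azcopy_copy` are hypothetical. It only passes the real `AZCOPY_BUFFER_GB` variable and the basic `azcopy copy <source> <destination>` form.

```python
import os
import subprocess
from typing import Dict, List, Optional, Tuple


def azcopy_env(buffer_gb: int, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with AZCOPY_BUFFER_GB set (value in gigabytes)."""
    env = dict(os.environ if base is None else base)  # copy, so the caller's dict is untouched
    env["AZCOPY_BUFFER_GB"] = str(buffer_gb)
    return env


def azcopy_copy_cmd(source: str, destination: str, azcopy: str = "azcopy") -> List[str]:
    """Build the argument list for a basic `azcopy copy <source> <destination>` call."""
    return [azcopy, "copy", source, destination]


def run_azcopy_copy(source: str, destination: str, buffer_gb: int = 1,
                    azcopy: str = "azcopy") -> Tuple[int, str]:
    """Run AzCopy with the buffer limit applied; return (exit code, combined output)."""
    result = subprocess.run(
        azcopy_copy_cmd(source, destination, azcopy),
        env=azcopy_env(buffer_gb),
        capture_output=True,
        text=True,
    )
    return result.returncode, result.stdout + result.stderr
```

A call would look like `run_azcopy_copy("<blob URL with SAS token>", r"C:\temp", buffer_gb=1)`, where the source URL and destination folder are placeholders. Setting the variable on a copied environment (rather than globally) keeps the limit scoped to the AzCopy process.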

Then I stumbled upon this GitHub issue from tomconte: https://github.com/Azure/azure-storage-azcopy/issues/781

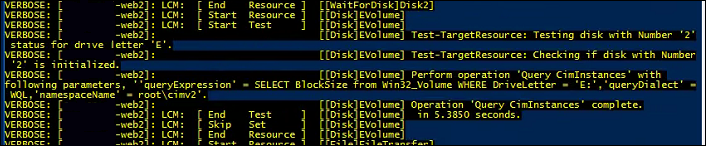

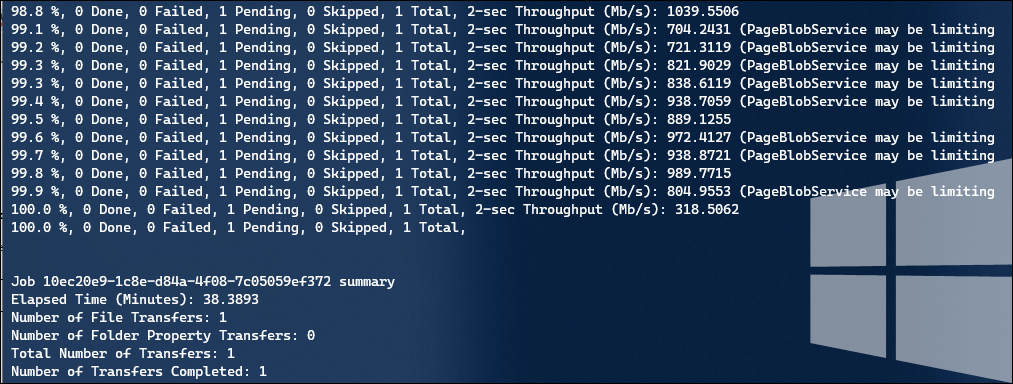

I added this to my Packer build so that it runs before AzCopy is called, and it seems to have resolved the problem.