I recently had a need to benchmark some Azure VMs, as I’m looking at Azure Storage Fuse and want to evaluate where bottlenecks in performance might be. I’m mostly saving this as a reference so I can come back to it again in the future.

The ‘fio’ tool can be used to perform this benchmarking. I originally found it through an Ars Technica article.

First, I need to create a profile that fio will use. I created a few different ones for different scenarios; they differ mostly in the target disk, which is set by the directory attribute of each reader.

    # Read test from P20
    [global]
    size=1g
    direct=1
    iodepth=8
    ioengine=libaio
    bs=512k

    [reader1]
    rw=randread
    directory=/tmp

    [reader2]
    rw=randread
    directory=/tmp

    [reader3]
    rw=randread
    directory=/tmp

    [reader4]
    rw=randread
    directory=/tmp

I saved this file to /tmp/fioread.ini. With this profile, each reader reads a 1 GB file in a random pattern, with a block size of 512k and an iodepth of 8. Four readers are configured to maximize throughput.
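Since the profiles differ mainly in the directory and the number of readers, they can be generated rather than copied by hand. The following is a minimal sketch, not part of my original workflow; the function name `build_read_job` and its parameters are hypothetical, and the defaults simply mirror the profile above.

```python
def build_read_job(directory, readers=4, size="1g", block_size="512k", iodepth=8):
    """Return the text of a fio job file with `readers` random-read jobs.

    Hypothetical helper: the option names (size, direct, iodepth, ioengine,
    bs, rw, directory) are the ones used in the profile in this post.
    """
    lines = [
        "[global]",
        f"size={size}",
        "direct=1",
        f"iodepth={iodepth}",
        "ioengine=libaio",
        f"bs={block_size}",
    ]
    # One [readerN] section per reader, all pointing at the same directory.
    for n in range(1, readers + 1):
        lines += ["", f"[reader{n}]", "rw=randread", f"directory={directory}"]
    return "\n".join(lines) + "\n"


job_text = build_read_job("/tmp")
print(job_text)
```

Writing `job_text` to /tmp/fioread.ini gives an equivalent of the hand-written profile; passing a different `directory` targets a different disk.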

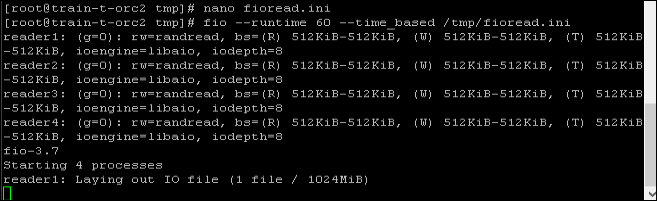

Then, I called fio with this:

fio --runtime 60 --time_based /tmp/fioread.ini

This runs the test for 60 seconds. The --time_based option ensures it runs for the full time even if the whole dataset has already been processed (it loops back around and begins again).
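When comparing several profiles, it can help to script the invocation. This is a rough sketch under a few assumptions: the helper names `build_fio_command` and `run_fio` are hypothetical, the `--runtime=60` form is used in place of `--runtime 60`, and the optional `--output-format=json` and `--output` flags are additions I did not use in the run described here.

```python
import shutil
import subprocess


def build_fio_command(job_file, runtime_seconds=60, json_output=None):
    """Build the fio argument list for a time-based run (hypothetical helper)."""
    cmd = ["fio", f"--runtime={runtime_seconds}", "--time_based"]
    if json_output is not None:
        # Optional: write a machine-readable report instead of the text summary.
        cmd += ["--output-format=json", f"--output={json_output}"]
    cmd.append(job_file)
    return cmd


def run_fio(job_file, runtime_seconds=60, json_output=None):
    """Run fio and raise if it is missing or exits with an error."""
    if shutil.which("fio") is None:
        raise RuntimeError("fio is not installed or not on PATH")
    subprocess.run(
        build_fio_command(job_file, runtime_seconds, json_output), check=True
    )
```

Calling `run_fio("/tmp/fioread.ini")` should behave like the command above; nothing runs until the function is called.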

When I start the command, it will lay out the files as defined in the .ini file being used.

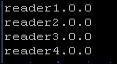

Since I have 4 readers, it produces 4 x 1 GB files in /tmp.

Then it immediately goes into the test, and after 60 seconds, produces results.

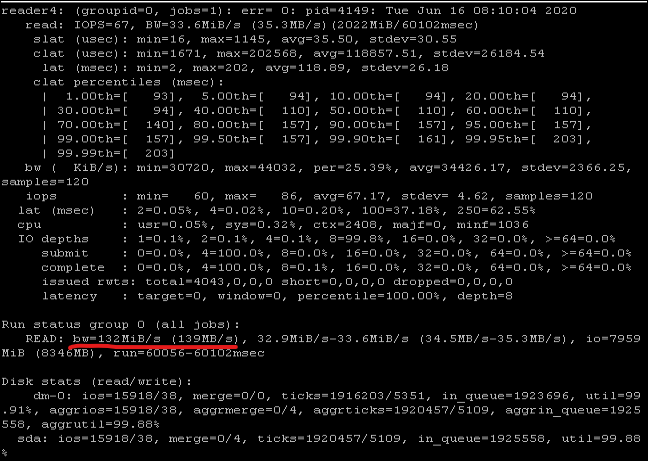

Each reader will have its own block of stats, with a summary at the bottom.
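If fio is run with JSON output (an assumption; the run described here used the default text output), the per-reader stats can be totalled programmatically. The sketch below assumes the report has a top-level `jobs` list whose entries carry `jobname` and a `read.bw` value in KiB/s; the function names are hypothetical, and the summed figure is only an approximation of the aggregate line fio prints itself.

```python
import json


def load_report(path):
    """Load a fio JSON report from disk."""
    with open(path) as f:
        return json.load(f)


def summarize_read_bandwidth(report):
    """Return (per-job MB/s, total MB/s) from a fio JSON report.

    Assumes each job's read bandwidth is reported in KiB/s under
    job["read"]["bw"]; values are converted to decimal MB/s.
    """
    per_job = {
        job["jobname"]: job["read"]["bw"] * 1024 / 1_000_000
        for job in report["jobs"]
    }
    return per_job, sum(per_job.values())
```

For example, `summarize_read_bandwidth(load_report("/tmp/out.json"))` would return one entry per reader plus their sum, where /tmp/out.json is a hypothetical report path.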

In this case, I’ve gotten 139 MB/s reading from a P4 (30GB) disk on an E8s_V3. The disk has a throughput of 25 MB/s but can burst to 170 MB/s, and the E8s_V3 will sustain 128 MB/s on a cached disk (this disk has read/write caching enabled). I’m not exactly sure yet why I was able to exceed 128 MB/s; perhaps some of the reads, but not all, were already coming from cache after the layout step?

I suspect that if I set cache to None on this OS disk, I’d see closer to the 170 MB/s burst, because the E8s_V3 can sustain 192 MB/s on an uncached disk.
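The reasoning above amounts to taking the lower of the disk limit and the VM limit. Here is a small sketch of that arithmetic using only the figures quoted in this post; the simple `min` model is my assumption and deliberately ignores reads served from cache, which may be why the observed number does not fit it.

```python
def expected_ceiling(disk_limit_mbps, vm_limit_mbps):
    """Assumed model: throughput is capped by the lower of the two limits."""
    return min(disk_limit_mbps, vm_limit_mbps)


P4_BURST_MBPS = 170         # disk burst limit quoted above
E8S_V3_CACHED_MBPS = 128    # VM limit for cached disks quoted above
E8S_V3_UNCACHED_MBPS = 192  # VM limit for uncached disks quoted above
OBSERVED_MBPS = 139         # result from the fio run above

cached = expected_ceiling(P4_BURST_MBPS, E8S_V3_CACHED_MBPS)
uncached = expected_ceiling(P4_BURST_MBPS, E8S_V3_UNCACHED_MBPS)

print(f"cached ceiling:   {cached} MB/s")    # 128
print(f"uncached ceiling: {uncached} MB/s")  # 170
print(f"observed exceeds cached ceiling by {OBSERVED_MBPS - cached} MB/s")  # 11
```

Under this model the cached ceiling is 128 MB/s and the uncached ceiling is 170 MB/s, so the observed 139 MB/s sits 11 MB/s above what caching alone should allow.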