Now that I had a reliable, programmatic way of adding a one-time maintenance window in PRTG, I wanted to offer this functionality to the end users responsible for their own sets of sensors. Since I have experience with C# and ASP.NET, and didn’t have the luxury of time to learn something new (ASP.NET Core, doing it entirely in JavaScript, etc.), I continued down that path.

Going through my requirements as I built and fine-tuned it, this is what I ended up with:

- Must use Windows Authentication

- Provide functionality to select a “logical group” that is a pre-defined set of objects for applying the maintenance window

- Be able to edit these “logical groups” outside of the code base, preferably in a simple static file

- Be able to hide certain “logical groups” from view and selection, depending on the Active Directory group membership of the user viewing the site

- Allow the user to supply two datetime values, with validation that both are present and that the end datetime is later than the start datetime

- Allow user to supply conditional parameters for additional objects to include

- Display results of the operation for the user

- Email results of the operation to specific recipients
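The datetime requirement above can be sketched as a small server-side check. This is only an illustration and not necessarily how the site implements it: the class name, the `Validate` method, and the `yyyy-MM-dd HH:mm` input format are all assumptions made for the example.

```csharp
using System;
using System.Globalization;

public static class MaintenanceWindowInput
{
    // Hypothetical input format; match it to whatever the date/time picker emits.
    private const string Format = "yyyy-MM-dd HH:mm";

    // Returns null when the input is valid, otherwise a message to show the user.
    public static string Validate(string startText, string endText)
    {
        // Both values must be supplied.
        if (string.IsNullOrWhiteSpace(startText) || string.IsNullOrWhiteSpace(endText))
            return "Both a start and an end date/time are required.";

        // Both values must parse in the expected format.
        DateTime start, end;
        bool startOk = DateTime.TryParseExact(startText, Format,
            CultureInfo.InvariantCulture, DateTimeStyles.None, out start);
        bool endOk = DateTime.TryParseExact(endText, Format,
            CultureInfo.InvariantCulture, DateTimeStyles.None, out end);
        if (!startOk || !endOk)
            return "Dates must be in the format " + Format + ".";

        // The window must end after it starts.
        if (end <= start)
            return "The end date/time must be later than the start date/time.";

        return null;
    }
}
```

A button click handler could call `Validate` with the two text box values and display the returned message instead of running the script when it is not null.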

I started with this post from Jeff Murr, which details how to use ASP.NET to call a PowerShell script and return some output. It formed the basis of what I built.

I began by trying to use Jeff’s code as-is, as a proof of concept. One of the first problems I ran into was getting my project to recognize the System.Management.Automation reference. It kept throwing this error:

The type or namespace name 'Automation' does not exist in the namespace 'System.Management' (are you missing an assembly reference?)

I eventually came across this blog post that contained a comment with the resolution for me:

You have to add:

Microsoft PowerShell version (5/4/3/..) Reference Assembly.

You can search for "PowerShell" in NuGet Packages Manager search bar

Once I had done this, the project could be built and I had a functional method of executing PowerShell from my site.
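For readers who have not seen it, hosting PowerShell from C# looks roughly like the sketch below. It is a minimal illustration that assumes the PowerShell reference assembly package mentioned above is installed. The `PowerShellRunner` class and its `Run` method are names invented for this example; they come from neither Jeff’s post nor this project.

```csharp
using System;
using System.Management.Automation; // provided by the PowerShell reference assembly package
using System.Text;

public static class PowerShellRunner
{
    // Runs a script and returns its output and any errors as plain text.
    public static string Run(string scriptText)
    {
        using (PowerShell shell = PowerShell.Create())
        {
            shell.AddScript(scriptText);

            var output = new StringBuilder();

            // Invoke() returns the objects the script wrote to the pipeline.
            foreach (PSObject item in shell.Invoke())
            {
                if (item != null)
                    output.AppendLine(item.ToString());
            }

            // Non-terminating errors are collected on the error stream.
            foreach (ErrorRecord error in shell.Streams.Error)
            {
                output.AppendLine("ERROR: " + error);
            }

            return output.ToString();
        }
    }
}
```

Ending the script with `Out-String` makes each pipeline object arrive as already-formatted text, which is convenient when the result is simply written to a label or text box on the page.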

Building out the framework of the site was simple, and I used what I had recently learned about CSS Flexbox to lay out my conditional panels the way I wanted.

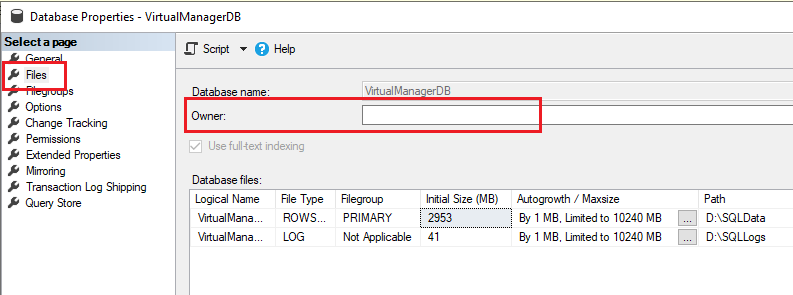

I decided to use an XML file as the data source for my “logical groups”, so that team members can simply modify it and push changes without having to understand anything about the code. The XML file looks like this:

    <?xml version="1.0" standalone="yes"?>
    <types>
      <type Id="0" Code="None">
      </type>
      <type Id="1" Code="Client1">
        <TimeZone>MST</TimeZone>
        <emailaddress>notificationlist@domain.com,notificationlist2@domain.com</emailaddress>
      </type>
      <type Id="2" Code="Client2">
        <TimeZone>MST</TimeZone>
        <emailaddress>notificationlist@domain.com,notificationlist2@domain.com</emailaddress>
      </type>
      <type Id="3" Code="Client3">
        <TimeZone>MST</TimeZone>
        <emailaddress>notificationlist@domain.com,notificationlist2@domain.com</emailaddress>
      </type>
    </types>
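As an illustration of how a file in this shape can be consumed, here is a minimal sketch using LINQ to XML. It is one possible approach and not necessarily the one used in the project. The `LogicalGroup` and `LogicalGroupReader` names are assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical model for one <type> entry.
public class LogicalGroup
{
    public int Id { get; set; }
    public string Code { get; set; }
    public string TimeZone { get; set; }
    public string[] EmailAddresses { get; set; }
}

public static class LogicalGroupReader
{
    // Loads every <type> element under the <types> root.
    public static List<LogicalGroup> Load(string path)
    {
        XDocument doc = XDocument.Load(path);

        return doc.Root.Elements("type").Select(t => new LogicalGroup
        {
            Id = (int)t.Attribute("Id"),
            Code = (string)t.Attribute("Code"),
            // Child elements are optional (the "None" entry has neither),
            // so these casts yield null when the element is missing.
            TimeZone = (string)t.Element("TimeZone"),
            EmailAddresses = ((string)t.Element("emailaddress") ?? "")
                .Split(new[] { ',' }, StringSplitOptions.RemoveEmptyEntries)
                .Select(a => a.Trim())
                .ToArray()
        }).ToList();
    }
}
```

The resulting list can be bound to a drop-down, using `Code` as the display text and `Id` as the value, and the selected entry then supplies the time zone and the notification recipients.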

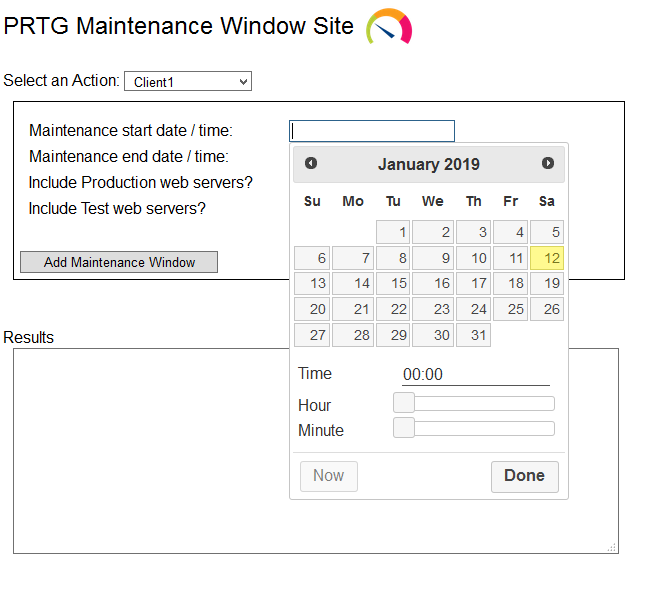

Another issue I had was with choosing a good date/time control. The out-of-the-box ones are clearly inadequate, so I decided to use a jQuery timepicker. jQuery and client-side scripts are a little unfamiliar to me, so I spent quite a bit of time tinkering to get it just right, when in reality it should have only been a brief effort.

To get my PowerShell script to run and return its Out-String values to the page, I had to add UnobtrusiveValidationMode="None". I did this in the page declaration at the top of the page, but it could also have been set in web.config (as the ValidationSettings:UnobtrusiveValidationMode app setting). Without this, when the page attempted to run the PowerShell Invoke-WebRequest, it did so under the user context of my IIS Application Pool and tried to run the Internet Explorer first-run wizard. Adding UnobtrusiveValidationMode bypassed this requirement.

Another thing I wanted was the ability to change the location of the PowerShell script, and to disable things like email notifications, while debugging on my local machine. To do that, I used an if statement that tests HttpContext.Current.Request.IsLocal.
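The idea can be sketched as follows. The `RuntimeSettings` class and both script paths are hypothetical placeholders, not values from the project. Only `HttpContext.Current.Request.IsLocal` is the real ASP.NET property.

```csharp
using System.Web;

public static class RuntimeSettings
{
    // True when the current request originates from the local machine,
    // which is the case when debugging from Visual Studio.
    public static bool IsLocalDebug
    {
        get
        {
            HttpContext context = HttpContext.Current;
            return context != null && context.Request.IsLocal;
        }
    }

    // Hypothetical paths: replace with the real script locations.
    public static string ScriptPath
    {
        get
        {
            return IsLocalDebug
                ? @"C:\dev\scripts\maintenance-window.ps1"
                : @"D:\sites\scripts\maintenance-window.ps1";
        }
    }

    // Skip sending email while testing locally.
    public static bool SendEmail
    {
        get { return !IsLocalDebug; }
    }
}
```

Keeping the check in one place means the rest of the page only asks for `ScriptPath` or `SendEmail`, and never needs to know whether it is running locally.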

Here’s what the site looks like:

You can find the GitHub repository of this code here: https://github.com/jeffwmiles/PrtgMaintenanceWindow