I learned something recently about calling an Azure Automation Runbook through PowerShell, particularly around how to pass multi-value entries in a single parameter.

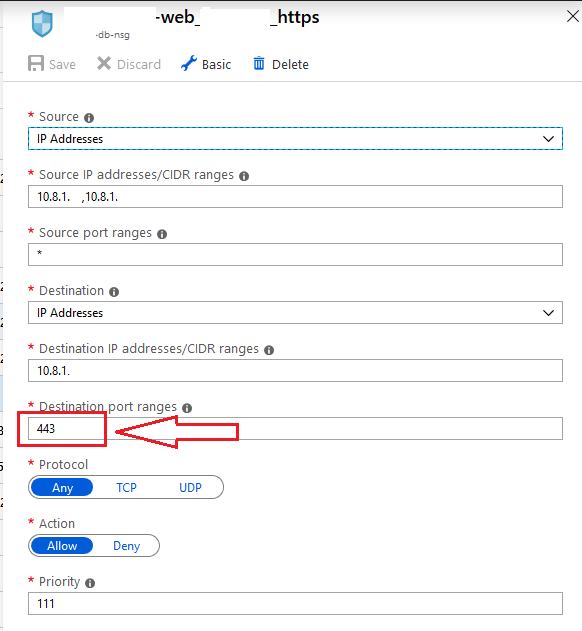

The original concept was that I have a runbook which takes input parameters of an Azure resource group, network security group, and DNS name. It takes this information, resolves the DNS name to an IP address, and then creates a rule inside the network security group for it.

Here’s a simplified example of what I started with:

    param(
        [parameter(Mandatory=$false)]
        [string]$nsggroup = "*",

        [parameter(Mandatory=$false)]
        [string]$rulename = "source_dest_port",

        [parameter(Mandatory=$false)]
        [string]$rgname = "*-srv-rg",

        [parameter(Mandatory=$false)]
        [string]$endpointfqdn = "endpoint.vendor.com"
    )

    # Resolve the endpoint's DNS name to its IP address(es)
    $dnstoip = [System.Net.Dns]::GetHostAddresses("$endpointfqdn")

    $subscriptions = Get-AzSubscription
    $nsgs = Get-AzNetworkSecurityGroup -ResourceGroupName "$rgname" -Name "$nsggroup"

    ForEach ($nsg in $nsgs) {
        $nsgname = $nsg.name

        # Note: $priority is not defined in this simplified excerpt
        $nsg | Add-AzNetworkSecurityRuleConfig -Access Allow `
            -DestinationAddressPrefix $dnstoip.IPAddressToString `
            -DestinationPortRange 443 -Direction Outbound `
            -Name $rulename -Priority $priority `
            -SourceAddressPrefix "*" -SourcePortRange "*" -Protocol "*" |
            Set-AzNetworkSecurityGroup | Out-Null
    }
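One detail worth seeing in isolation is that the DNS lookup returns an array of addresses, so a single name can yield more than one IP. Here is a minimal sketch of just that step; `Resolve-EndpointAddress` is a hypothetical helper name, not something from my runbook, and the IP literal is used only so the example runs without a real DNS lookup.

```powershell
# Hypothetical helper (not part of the original runbook):
# resolve a host name to a list of address strings.
function Resolve-EndpointAddress {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Fqdn
    )

    # GetHostAddresses returns an array of System.Net.IPAddress objects.
    $addresses = [System.Net.Dns]::GetHostAddresses($Fqdn)

    # Emit each address in its string form, one per output object.
    foreach ($address in $addresses) {
        $address.IPAddressToString
    }
}

# An IP literal resolves to itself, which makes a safe offline check.
$resolved = @(Resolve-EndpointAddress -Fqdn '127.0.0.1')
$resolved
```

Wrapping the call in `@( )` keeps the result an array even when only one address comes back.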

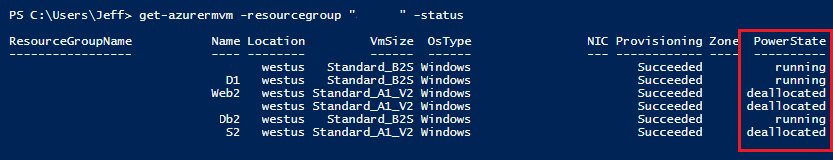

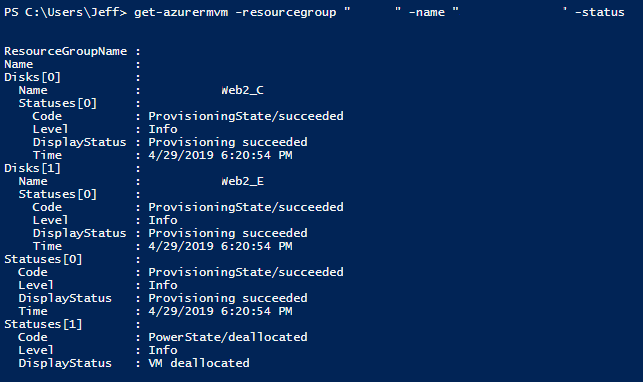

The problem with this was that once I took it to a production environment, I needed more flexibility over where the rule got applied, without having to create many instances of the scheduled runbook, each linked to its own parameters.

The key was that I wanted to pass in a list of network security group names, and have the runbook do a ForEach on that list.

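The primary change in the parameters was to make $nsggroup an [array] type, like this:

    [array]$nsggroup

When the runbook is started from the portal, the value is then entered using JSON-style array syntax:

    ['nsg1','nsg2']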
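To show how an [array] parameter behaves with a ForEach, here is a minimal sketch that runs without any Azure modules. `Invoke-NsgRuleUpdate` is a hypothetical stand-in for the runbook body, and it only reports what it would do rather than touching any network security group.

```powershell
# Hypothetical stand-in for the runbook body; it makes no Azure calls.
function Invoke-NsgRuleUpdate {
    param(
        [Parameter(Mandatory = $false)]
        [array]$nsggroup = @('*'),

        [Parameter(Mandatory = $false)]
        [string]$rulename = 'source_dest_port'
    )

    foreach ($name in $nsggroup) {
        # The real runbook would look up the NSG by $name and add the rule here.
        "Would apply rule '$rulename' to NSG '$name'"
    }
}

# A list of names produces one iteration per name.
$result = @(Invoke-NsgRuleUpdate -nsggroup @('nsg1', 'nsg2'))

# A single string is coerced to a one-element array, so the loop still works.
$single = @(Invoke-NsgRuleUpdate -nsggroup 'nsg1')

$result
$single
```

The useful property here is that callers who only have one NSG name do not need to wrap it in an array themselves.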

I originally picked up this syntax from the Azure Automation documentation, which states:

- If a runbook parameter takes an [array] or [object] type, then these must be passed in JSON format in the start runbook dialog. For example:

  - A parameter of type [object] that expects a property bag can be passed in the UI with a JSON string formatted like this: {"StringParam":"Joe","IntParam":42,"BoolParam":true}.

  - A parameter of type [array] can be input with a JSON string formatted like this: ["Joe",42,true]
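If you would rather generate those JSON strings than type them by hand, the built-in `ConvertTo-Json` and `ConvertFrom-Json` cmdlets can do it. This is only a sketch of the string formats quoted above; the variable names are mine, and nothing here talks to Azure.

```powershell
# An [array] parameter value, rendered as compact JSON text.
$nsgNames  = @('nsg1', 'nsg2')
$arrayJson = ConvertTo-Json -InputObject $nsgNames -Compress
$arrayJson    # ["nsg1","nsg2"]

# An [object] parameter value (a property bag), rendered the same way.
# [ordered] keeps the keys in the order they were written.
$bag = [ordered]@{ StringParam = 'Joe'; IntParam = 42; BoolParam = $true }
$objectJson = ConvertTo-Json -InputObject $bag -Compress
$objectJson   # {"StringParam":"Joe","IntParam":42,"BoolParam":true}

# Parsing the JSON text gives back PowerShell values.
$parsedArray  = ConvertFrom-Json -InputObject $arrayJson
$parsedObject = ConvertFrom-Json -InputObject $objectJson
$parsedArray.Count        # 2
$parsedObject.StringParam # Joe
```

Passing the array through `-InputObject` rather than the pipeline means a one-element array is still written as a JSON array instead of a bare string.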

In order to programmatically set up the runbook along with linked schedules, I put my input into an array containing PowerShell objects like this (note the “nsggroup” value on each object):

    $inputrules = @(
        [pscustomobject]@{
            name         = "dnsresolve1"
            rulename     = "source_dest_port"
            ports        = @(443)
            endpointfqdn = "endpoint.vendor.com"
            nsggroup     = @('nsg1', 'nsg2', 'nsg5', 'nsg6')
        },
        [pscustomobject]@{
            name         = "dnsresolve2"
            rulename     = "source2_dest_port"
            ports        = @(443)
            endpointfqdn = "endpoint2.vendor.com"
            nsggroup     = @('nsg3', 'nsg4')
        }
    )

In my script that does the programmatic setup, I use this array of objects to do something like this (vastly simplified):

    foreach ($object in $inputrules) {
        Register-AzAutomationScheduledRunbook -Name $runbookname `
            -ScheduleName "6Hours-$($object.name)" `
            -AutomationAccountName $automationAccountName `
            -ResourceGroupName $resourceGroupName `
            -Parameters @{
                "nsggroup"       = $object.nsggroup
                "rulename"       = $object.rulename
                "endpointfqdn"   = $object.endpointfqdn
                "ports"          = $object.ports
                "subscriptionid" = $clientsubscriptionid
            }
    }
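Before running a loop like the one above against a real Automation account, it can help to preview the schedule names and parameter sets it would produce. The following is a dry-run sketch under my own assumptions: it makes no Azure calls, the `$plan` variable and the all-zero subscription ID are placeholders, and the input is trimmed to the fields that matter for the preview.

```powershell
# Trimmed-down input in the same shape as the rules array above.
$inputrules = @(
    [pscustomobject]@{
        name         = 'dnsresolve1'
        rulename     = 'source_dest_port'
        endpointfqdn = 'endpoint.vendor.com'
        nsggroup     = @('nsg1', 'nsg2')
    },
    [pscustomobject]@{
        name         = 'dnsresolve2'
        rulename     = 'source2_dest_port'
        endpointfqdn = 'endpoint2.vendor.com'
        nsggroup     = @('nsg3', 'nsg4')
    }
)

# Placeholder value; a real script would supply the actual subscription ID.
$clientsubscriptionid = '00000000-0000-0000-0000-000000000000'

# Build one preview entry per rule instead of registering anything.
$plan = @(
    foreach ($object in $inputrules) {
        [pscustomobject]@{
            ScheduleName = "6Hours-$($object.name)"
            Parameters   = @{
                nsggroup       = $object.nsggroup
                rulename       = $object.rulename
                endpointfqdn   = $object.endpointfqdn
                subscriptionid = $clientsubscriptionid
            }
        }
    }
)

# Show each schedule with the NSG names it would receive.
foreach ($entry in $plan) {
    "$($entry.ScheduleName): $($entry.Parameters['nsggroup'] -join ', ')"
}
```

Each `Parameters` hashtable has the same shape as the one handed to `-Parameters` in the registration loop, with the NSG list still a plain PowerShell array.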

Because nsggroup is already an array on each custom object, I can pass it into the scheduled runbook like any other parameter, and Azure Automation takes it and applies it to the runbook's [array] parameter successfully.