While working on an Azure ARM Template, particularly one which references pre-existing resources rather than ones created within the template itself, I realized I needed to do more to make my code simple with as few parameters as possible.

For example, when referencing an existing Virtual Machine, I didn’t want to hard-code its name, ID, disks, or NICs as parameters that have to be supplied as configuration data (in this case, I was working on a template for Azure Site Recovery).

I knew about the “Reference” function that ARM templates provide, but I was struggling with the thought that I’d have to write up all my references in a functional and deployable template before I could actually validate that I had the syntax correct.

Thankfully I came across this TechNet blog post by Stefan Stranger, which demonstrates a method of testing your functions using an output resource.

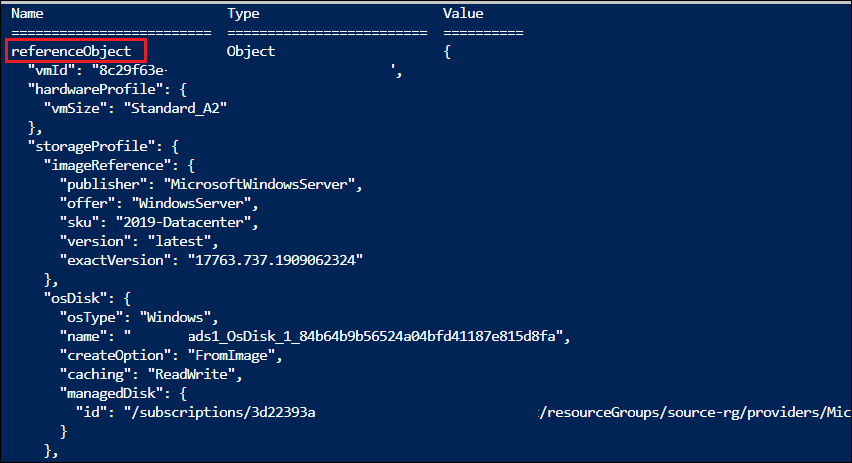

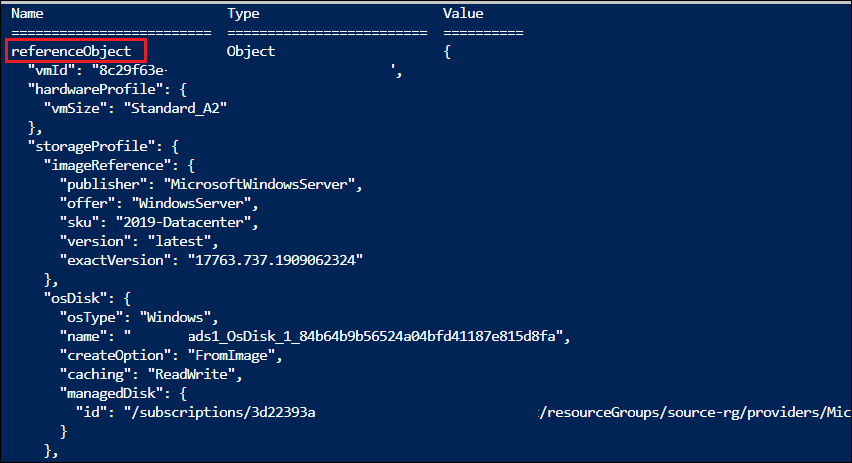

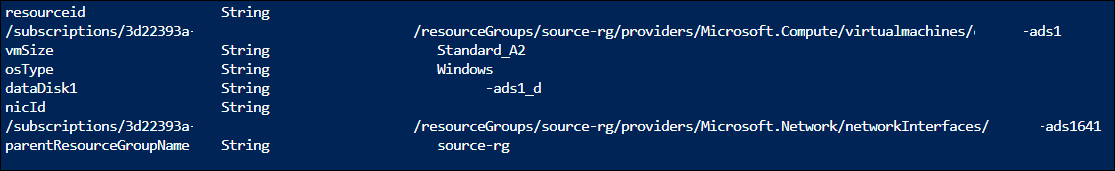

I’m not going to repeat his blog post (it’s a good read!), but I did make some additions to pull out the values of the reference that I wanted. In my example below, I started with the “referenceObject” output, reviewed its JSON, and then worked out which property paths to append to get the values that I wanted.
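A minimal sketch of that "review the JSON, then pick the path" step, runnable without Azure. The JSON here is a made-up, heavily trimmed stand-in for a VM's “referenceObject” output (the real object has many more properties, and every value below is invented); only the property names follow the VM resource shape.

```powershell
# Hypothetical, trimmed stand-in for the "referenceObject" output of a VM.
# All values are invented for illustration.
$sample = @'
{
  "hardwareProfile": { "vmSize": "Standard_D2s_v3" },
  "storageProfile": {
    "osDisk": { "osType": "Windows", "name": "testVM1-osdisk" },
    "dataDisks": [
      { "lun": 0, "name": "testVM1-data1" },
      { "lun": 1, "name": "testVM1-data2" }
    ]
  },
  "networkProfile": {
    "networkInterfaces": [
      { "id": "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/source-rg/providers/Microsoft.Network/networkInterfaces/testVM1-nic" }
    ]
  }
}
'@ | ConvertFrom-Json

# Walk the object the same way the template expression would:
# reference(...).hardwareProfile.vmSize, reference(...).storageProfile.dataDisks[1].name, ...
$vmSize    = $sample.hardwareProfile.vmSize
$dataDisk2 = $sample.storageProfile.dataDisks[1].name
$nicId     = $sample.networkProfile.networkInterfaces[0].id

$vmSize
$dataDisk2
$nicId
```

In a template, the same paths are appended after the closing parenthesis of `reference(...)`. Properties that are arrays in the JSON, such as the data disks, need an index in the expression.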

I’ve used the additional ResourceGroup syntax of the reference function in my example because it is likely I’ll be making an ARM deployment to one resource group while referencing resources in another. I also threw in an example of the “resourceId” function, to ensure I was using it properly.
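To make the resource group form concrete, here is a rough sketch of the string I would expect `resourceId` to resolve to when a resource group name is passed as the first argument. The helper name `Get-ExpectedResourceId` and the all-zero subscription GUID are made up for illustration; the snippet only formats a string and never calls Azure.

```powershell
# Hypothetical helper: builds the ID string that the template expression
#   [resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualMachines', parameters('vmName'))]
# is expected to resolve to. It does not contact Azure.
function Get-ExpectedResourceId {
    param(
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $ResourceType,   # e.g. 'Microsoft.Compute/virtualMachines'
        [Parameter(Mandatory)] [string] $ResourceName
    )
    "/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName/providers/$ResourceType/$ResourceName"
}

# Placeholder subscription GUID; substitute your own when comparing.
$expectedId = Get-ExpectedResourceId `
    -SubscriptionId '00000000-0000-0000-0000-000000000000' `
    -ResourceGroupName 'source-rg' `
    -ResourceType 'Microsoft.Compute/virtualMachines' `
    -ResourceName 'testVM1'

$expectedId
```

When the resource group argument is omitted, `resourceId` uses the resource group of the current deployment, which is why the extra argument matters when the VM lives in a different group than the one being deployed to.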

Here’s the PS1 script I used for testing; its output is shown after the script:

```powershell
<#
    Testing Azure ARM Functions
    https://blogs.technet.microsoft.com/stefan_stranger/2017/08/02/testing-azure-resource-manager-template-functions/
    https://docs.microsoft.com/en-us/azure/azure-resource-manager/resource-group-template-functions-resource#reference
#>

#region Variables
$ResourceGroupName = 'source-rg'
$Location = 'WestUS2'
$subscription = "3d22393a"
#endregion

#region Connect to Azure
# Not needed when running in Azure Cloud Shell
#Add-AzAccount

# Select Azure subscription
Set-AzContext -SubscriptionId $subscription
#endregion

#region Create resource group to test Azure template functions
if (!(Get-AzResourceGroup -Name $ResourceGroupName -Location $Location -ErrorAction SilentlyContinue)) {
    New-AzResourceGroup -Name $ResourceGroupName -Location $Location
}
#endregion

#region Template with no resources; the outputs exercise resourceId() and reference()
$template = @'
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "vmName": {
      "type": "string",
      "defaultValue": "testVM1"
    },
    "ResourceGroup": {
      "type": "string",
      "defaultValue": "source-rg"
    }
  },
  "variables": { },
  "resources": [ ],
  "outputs": {
    "referenceObject": {
      "type": "object",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01')]"
    },
    "fullReferenceOutput": {
      "type": "object",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01', 'Full')]"
    },
    "resourceid": {
      "type": "string",
      "value": "[resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName'))]"
    },
    "vmSize": {
      "type": "string",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').hardwareprofile.vmSize]"
    },
    "osType": {
      "type": "string",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.osDisk.osType]"
    },
    "DataDisk1": {
      "type": "string",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.datadisks[0].name]"
    },
    "DataDisk2": {
      "type": "string",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.datadisks[1].name]"
    },
    "nicId": {
      "type": "string",
      "value": "[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').networkProfile.networkInterfaces[0].id]"
    }
  }
}
'@
#endregion

# Write the template to disk so the Az cmdlets can read it
$template | Out-File -FilePath .\template.json -Force

#region Test ARM template
Test-AzResourceGroupDeployment -ResourceGroupName $ResourceGroupName -TemplateFile .\template.json -OutVariable testarmtemplate
#endregion

#region Deploy ARM template (uses the parameter defaults; no parameter file)
$result = New-AzResourceGroupDeployment -ResourceGroupName $ResourceGroupName -TemplateFile .\template.json
$result
#endregion

# Cleanup
Remove-Item .\template.json -Force
```

Example reference object output:

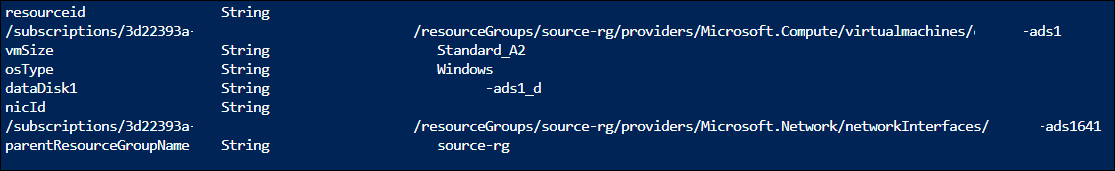
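If the default display of `$result` is hard to read, the outputs can be flattened into a small table. This is only a sketch: the `$outputs` hashtable below is a made-up stand-in so the snippet runs without Azure, and it assumes that the real `$result.Outputs` is a dictionary whose entries expose `Type` and `Value`.

```powershell
# Hypothetical stand-in for $result.Outputs (invented values).
# With a real deployment, use: $outputs = $result.Outputs
$outputs = [ordered]@{
    resourceid = [pscustomobject]@{
        Type  = 'String'
        Value = '/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/source-rg/providers/Microsoft.Compute/virtualMachines/testVM1'
    }
    vmSize = [pscustomobject]@{ Type = 'String'; Value = 'Standard_D2s_v3' }
    osType = [pscustomobject]@{ Type = 'String'; Value = 'Windows' }
}

# One row per output: name, declared type, resolved value
$rows = foreach ($name in $outputs.Keys) {
    [pscustomobject]@{
        Name  = $name
        Type  = $outputs[$name].Type
        Value = $outputs[$name].Value
    }
}

$rows | Format-List
```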

Example reference strings output:

I’m keeping a list of the common references I’m touching right now as note-taking:

```
[resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName'))]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').hardwareprofile.vmSize]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.osDisk.osType]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.osDisk.Name]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.osDisk.managedDisk.id]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.osDisk.diskSizeGB]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.datadisks[0].name]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.datadisks[0].managedDisk.id]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').storageProfile.datadisks[0].diskSizeGB]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01').networkProfile.networkInterfaces[0].id]

[reference(resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualmachines', parameters('vmName')), '2019-07-01', 'Full').ResourceGroupName]
```
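Since every one of these repeats the same `resourceId(...)` call, one option is to keep that call in a template variable and reuse it. The sketch below is an assumption about how I might shorten things, not something taken from the referenced post; the variable name `vmId` and the output name `osDiskId` are made up. `resourceId` can be used in the `variables` section, whereas `reference` cannot, so the `reference(...)` calls stay in `outputs`.

```powershell
# Sketch: store the long resourceId(...) expression once and reuse it.
# 'vmId' and 'osDiskId' are hypothetical names chosen for this example.
$template = @'
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "vmName": { "type": "string", "defaultValue": "testVM1" },
    "ResourceGroup": { "type": "string", "defaultValue": "source-rg" }
  },
  "variables": {
    "vmId": "[resourceId(parameters('ResourceGroup'), 'Microsoft.Compute/virtualMachines', parameters('vmName'))]"
  },
  "resources": [ ],
  "outputs": {
    "vmSize": {
      "type": "string",
      "value": "[reference(variables('vmId'), '2019-07-01').hardwareProfile.vmSize]"
    },
    "osDiskId": {
      "type": "string",
      "value": "[reference(variables('vmId'), '2019-07-01').storageProfile.osDisk.managedDisk.id]"
    }
  }
}
'@

# Local sanity check only: confirms the JSON parses and lists the output names.
# It does not evaluate the template expressions; that still needs a deployment.
$parsed = $template | ConvertFrom-Json
$outputNames = $parsed.outputs.PSObject.Properties.Name
$outputNames
```

The here-string can be dropped into the test script in place of the original `$template` to check the shortened expressions against a real VM.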
