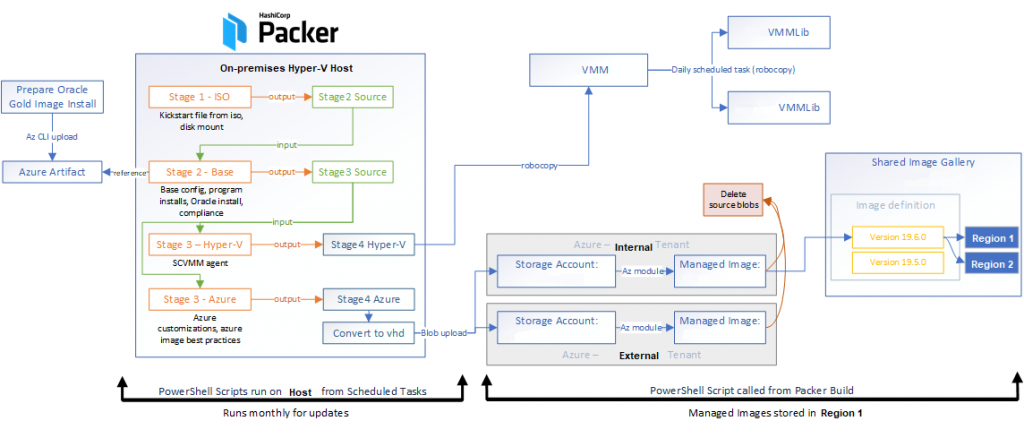

I’ve begun poking around with custom Azure managed images, using Packer as an on-premises build source. The goal is to use the same image within an on-premises test environment and in an Azure production environment, and take a small step towards immutable infrastructure.

There are lots of interesting questions around this topic, and the answers may differ from one environment to the next. Here are a few thoughts on where I am right now:

- Should I use Packer to build a traditional Hyper-V image, convert it to VHD and upload it to Azure, or directly use the Packer builder for Azure?

  - Because I have the on-prem resources, am building on a pre-existing framework that produces local-only images, and need images for both Hyper-V and Azure, I decided to keep the build chain consistent rather than split it off to a whole new builder.

- Should I just use the Azure Image Builder to streamline the process?

  - Maybe eventually – again, I wanted to build incrementally on the successes of existing on-premises deployments. The Image Builder service is very intriguing, and would be a logical next step once it leaves Preview.

- Why do images need to be built for on-premises in the first place? Why not native Azure resources?

  - While deploying into Azure would offer a lot more flexibility and scale, there are still many reasons to maintain a local presence for resources. It mostly boils down to finances: CapEx investments already exist, whereas Azure would bring new OpEx costs, and without appropriate systems in place to constrain size and resource lifetime, those costs could overrun.

- Why build images, and not do configuration management after deploy?

  - I go back and forth on this question frequently, and I have seen much conversation about it. As with most of IT, “it depends”. Version-controlled custom images let infrastructure shift left, so quality is built in repeatably and consistently. What if you’re doing post-deployment config management and DNS isn’t available, or a service crashes halfway through, or any number of other things go wrong? Now the resource you’ve deployed is delayed, effort goes into resolving the problem, and confidence in its quality drops.

  - Immutable builds do not natively solve the problem of configuration drift post-deployment, and this is one of the big gaps I see when trying to fit traditional IaaS into a more modern profile. The ‘answer’ is to monitor for drift and re-build from source (cattle not pets) when it is detected, but not everyone is working with modern microservices running in centrally orchestrated containers to achieve this. Instead, there may be an intermediate step where immutable image builds are combined with post-deployment configuration management that watches for drift.

Once I had an image ready, I pored over the Microsoft Docs on managed images and Shared Image Gallery before testing.

I intended the distribution flow to look something like this:

Packer drops VHD in Blob storage -> Create Managed Image -> Use Shared Image Gallery definition -> Create Image Version
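As a rough illustration of that flow, here is a minimal Python sketch that only assembles the two Azure CLI calls involved (`az image create` and `az sig image-version create`) without running them. It assumes the gallery and image definition already exist, and every value passed in (subscription ID, resource group, blob URL, names, version) is a hypothetical placeholder rather than anything from my environment.

```python
import shlex
from typing import List


def managed_image_id(subscription_id: str, resource_group: str, image_name: str) -> str:
    """Build the ARM resource ID of a managed image."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Compute/images/{image_name}"
    )


def build_distribution_commands(
    subscription_id: str,
    resource_group: str,
    location: str,
    vhd_blob_url: str,
    image_name: str,
    os_type: str,
    gallery_name: str,
    definition_name: str,
    version: str,
) -> List[List[str]]:
    """Return the CLI calls for: VHD blob -> managed image -> SIG image version.

    Assumption: the gallery and image definition were created beforehand.
    """
    create_image = [
        "az", "image", "create",
        "--resource-group", resource_group,
        "--name", image_name,
        "--location", location,
        "--os-type", os_type,  # "Linux" or "Windows"
        "--source", vhd_blob_url,  # the VHD that Packer uploaded
    ]
    create_version = [
        "az", "sig", "image-version", "create",
        "--resource-group", resource_group,
        "--gallery-name", gallery_name,
        "--gallery-image-definition", definition_name,
        "--gallery-image-version", version,
        "--managed-image",
        managed_image_id(subscription_id, resource_group, image_name),
    ]
    return [create_image, create_version]


if __name__ == "__main__":
    # All values below are made-up placeholders.
    for cmd in build_distribution_commands(
        subscription_id="00000000-0000-0000-0000-000000000000",
        resource_group="rg-images",
        location="eastus",
        vhd_blob_url="https://example.blob.core.windows.net/vhds/base.vhd",
        image_name="base-image",
        os_type="Linux",
        gallery_name="myGallery",
        definition_name="base-definition",
        version="1.0.0",
    ):
        print(shlex.join(cmd))
```

Building the commands as lists keeps the sketch safe to run anywhere; the printed lines can be reviewed first, or passed to `subprocess.run` once the values are real.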

The documentation left me with a few unanswered questions, which I’ve outlined here:

- What if I remove the original blob, can I still use the image? Yes, you can continue to deploy the managed image without the source blob.

- What if the blob gets replaced, does it update the image? No. Future deployments of the image will still deliver it as it was when the image was created.

- What if I remove the source managed image, can I still use the Shared Image Gallery definition version?

  - According to the guidance from Microsoft: Yes, but if you plan on adding replica regions, do not delete the source managed image. The source managed image is needed for replicating the image version to additional regions.

- What if I update the source managed image, does it update the Shared Image Gallery definition version? Mostly no. As with the blob-to-image relationship, updating the source managed image doesn’t update the version in a SIG definition. What I still need to test is what happens if you replace the source managed image and then replicate an image definition version to a new region: will it contain the updates in the image?
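To make the replica-region caveat concrete, here is a small Python sketch that assembles two Azure CLI calls: an `az image show` to confirm the source managed image is still around, followed by an `az sig image-version update` that adds a target region through the generic `--add publishingProfile.targetRegions` argument. The commands are only built, not executed, and all resource names and the region are hypothetical placeholders.

```python
from typing import List


def build_add_region_commands(
    image_resource_group: str,
    image_name: str,
    gallery_resource_group: str,
    gallery_name: str,
    definition_name: str,
    version: str,
    new_region: str,
) -> List[List[str]]:
    """Return CLI calls to verify the source image, then add a replica region."""
    # Step 1: fails if the source managed image has been deleted.
    check_source = [
        "az", "image", "show",
        "--resource-group", image_resource_group,
        "--name", image_name,
    ]
    # Step 2: append one more target region to the existing image version.
    add_region = [
        "az", "sig", "image-version", "update",
        "--resource-group", gallery_resource_group,
        "--gallery-name", gallery_name,
        "--gallery-image-definition", definition_name,
        "--gallery-image-version", version,
        "--add", "publishingProfile.targetRegions", f"name={new_region}",
    ]
    return [check_source, add_region]
```

Running the check first is just a cheap guard: if the first command errors, there is little point attempting the replication.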

Here are a few other important design discoveries I’ve made along the way:

- A VM from an Azure Managed Image can only be deployed within the same Region and Subscription as the Image (i.e. if you want to re-use the image across multiple regions or subscriptions, you’ll have to create additional images to suit).

- A VM from a Shared Image Gallery definition CAN be deployed outside the gallery’s subscription, in any region the image is replicated to, as long as the identity performing the VM deployment has RBAC access to the Shared Image Gallery resource.

- Microsoft says “as a best practice, we encourage you to keep the resource group, shared image gallery, image definition, and image version in the same location.” I can confirm that even if the resource group you place the Shared Image Gallery in is in a different Location than the SIG itself or the image definition, there are no barriers to creating those resources or a VM from them.

- Terraform AzureRM provider support (as of today at least) has limitations in managing Shared Image components:

  - You cannot set properties for VM Generation on the image definitions.

  - Resource removal does not respect the dependencies between an image version, definition, and gallery.
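For the removal-order problem, the dependency chain runs gallery → definition → version, so teardown has to go the other way. The sketch below is not a Terraform fix; it simply assembles the equivalent Azure CLI delete calls in a dependency-safe order (versions first, then the definition, then the gallery). Resource names are hypothetical, and nothing is executed.

```python
from typing import List


def build_teardown_commands(
    resource_group: str,
    gallery_name: str,
    definition_name: str,
    versions: List[str],
) -> List[List[str]]:
    """Return delete commands ordered: image versions, definition, gallery."""
    commands: List[List[str]] = []
    # 1. Every image version must go before its definition can be removed.
    for version in versions:
        commands.append([
            "az", "sig", "image-version", "delete",
            "--resource-group", resource_group,
            "--gallery-name", gallery_name,
            "--gallery-image-definition", definition_name,
            "--gallery-image-version", version,
        ])
    # 2. The definition must go before the gallery.
    commands.append([
        "az", "sig", "image-definition", "delete",
        "--resource-group", resource_group,
        "--gallery-name", gallery_name,
        "--gallery-image-definition", definition_name,
    ])
    # 3. Finally, the (now empty) gallery itself.
    commands.append([
        "az", "sig", "delete",
        "--resource-group", resource_group,
        "--gallery-name", gallery_name,
    ])
    return commands
```

This handles a single definition; a gallery with several definitions would need the first two steps repeated for each one before the final gallery delete.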

At the end of the day, I’ve come up with the following flow which builds and delivers my images: