July 2022 Update

After support cases with Inedo and Microsoft, the issue below was determined to be caused by Azure AD Application Proxy setting the Content-Length header to 0 on every HEAD response.

Microsoft is aware of this and has it in their backlog. There is also a corresponding feedback item:

https://feedback.azure.com/d365community/idea/43a1e16f-bffe-ec11-a81b-6045bd853c94
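To make the update concrete, here is a simplified Python model of a registry client that builds a manifest descriptor from the headers of a HEAD response. It assumes, as the finding above implies, that the client trusts a Content-Length header whenever one is present and falls back to a GET only when headers are missing. The function name is hypothetical, and this is not containerd's actual code.

```python
from typing import Dict, Optional


def descriptor_from_head(headers: Dict[str, str]) -> Optional[dict]:
    """Build a manifest descriptor from HEAD response headers (hypothetical helper).

    Returns None when the headers are insufficient, in which case a client
    would have to fall back to a full GET of the manifest.
    """
    # HTTP header names are case-insensitive, so normalise them first.
    h = {name.lower(): value for name, value in headers.items()}
    digest = h.get("docker-content-digest")
    length = h.get("content-length")
    if digest is None or length is None:
        return None  # not enough information: fall back to GET
    # A Content-Length that is present is trusted as-is, even if a proxy
    # rewrote it to 0; this is the failure mode described in the update.
    return {
        "mediaType": h.get("content-type", ""),
        "digest": digest,
        "size": int(length),
    }


# A HEAD response as rewritten by the proxy: the digest is right, the length is not.
proxied = {
    "Docker-Content-Digest": "sha256:hash",
    "Content-Length": "0",
    "Content-Type": "application/vnd.docker.distribution.manifest.v2+json",
}
print(descriptor_from_head(proxied)["size"])  # prints 0
```

In this model, a HEAD response that omits Content-Length would take the GET fallback instead. That is why a zeroed header is worse than a missing one.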

Original Post

I just solved (kind of) an issue with Azure Kubernetes Service (AKS) pulling an image from a private container registry hosted on Inedo ProGet.

Using AKS 1.22.4 with containerd 1.55, I followed the Kubernetes instructions for pulling an image from a private registry: create a secret, then reference it in the YAML manifest.

However, when I apply the manifest, my Pod doesn’t start, ending with an ErrImagePull error.

Performing a “kubectl describe pod [podname]” shows this error:

```
Failed to pull image "source repo/imagename:tag": rpc error: code = InvalidArgument desc = failed to pull and unpack image "source repo/imagename:tag": unable to fetch descriptor (sha256:hash) which reports content size of zero: invalid argument
```

There isn't much else online about this message, but I CAN see that it comes directly from the containerd source code, in handlers.go in the containerd/containerd repository on GitHub.
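The failing check can be modelled in a few lines. This Python sketch is not containerd's source. It only reproduces the wording of the error above, and the exception class is a hypothetical stand-in for containerd's invalid-argument error. It treats a missing size the same as a size of zero, because Go's encoding/json leaves an absent field at its zero value.

```python
class InvalidArgument(Exception):
    """Hypothetical stand-in for containerd's invalid-argument error."""


def ensure_fetchable(desc: dict) -> None:
    """Refuse to fetch a descriptor that advertises zero bytes of content."""
    # A descriptor decoded without a "size" key ends up with size 0 in Go,
    # so a missing size and an explicit 0 are treated identically here.
    if desc.get("size", 0) == 0:
        raise InvalidArgument(
            f"unable to fetch descriptor ({desc.get('digest')}) "
            "which reports content size of zero: invalid argument"
        )


ensure_fetchable({"digest": "sha256:hash", "size": 8486})  # passes silently
try:
    ensure_fetchable({"digest": "sha256:hash"})  # no "size" key
except InvalidArgument as err:
    print(err)
```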

I did find one reference linking this error to an “Azure proxy”, and my ProGet instance was exposed to the Internet through Azure AD Application Proxy. I could still reference and pull other container images from ProGet without trouble. Even so, I temporarily switched to a direct connection through my firewall, but that didn't resolve the problem.

I spent some time making sure my Kubernetes “imagePullSecrets” configuration was correct, and again was able to verify a successful pull from ProGet.

On a test machine, I also verified that I could run ‘docker login’ against ProGet followed by ‘docker pull’. That pull succeeded with this troublesome image.

I used ‘docker image inspect [image name]’ to compare my working image and broken image, but didn’t find anything conclusive.

I knew this SAME image worked from Azure Container Registry (ACR), so I used docker tag and push to copy the image into my ProGet:

```
docker tag myrepo.azurecr.io/path/landingpage:tag privaterepo.domain.com/path/landing-page:tag
docker push privaterepo.domain.com/path/landing-page:tag
```

My thought was, “same image, should work!” but I still received the same problem.

Wanting to look deeper, I tried to find a way to see what my AKS cluster was doing during the image pull. That's when I came across the “Debugging Kubernetes nodes with crictl” page.

Using that page and the Microsoft Docs article on connecting to AKS nodes, I started a privileged container and ran commands directly against my node with “chroot /host”.

I hit a roadblock following the crictl help docs to pull an image: it kept returning 403 Forbidden despite correct credentials. Then I learned about “ctr”, which turned out to be already usable on my nodes from my privileged container!

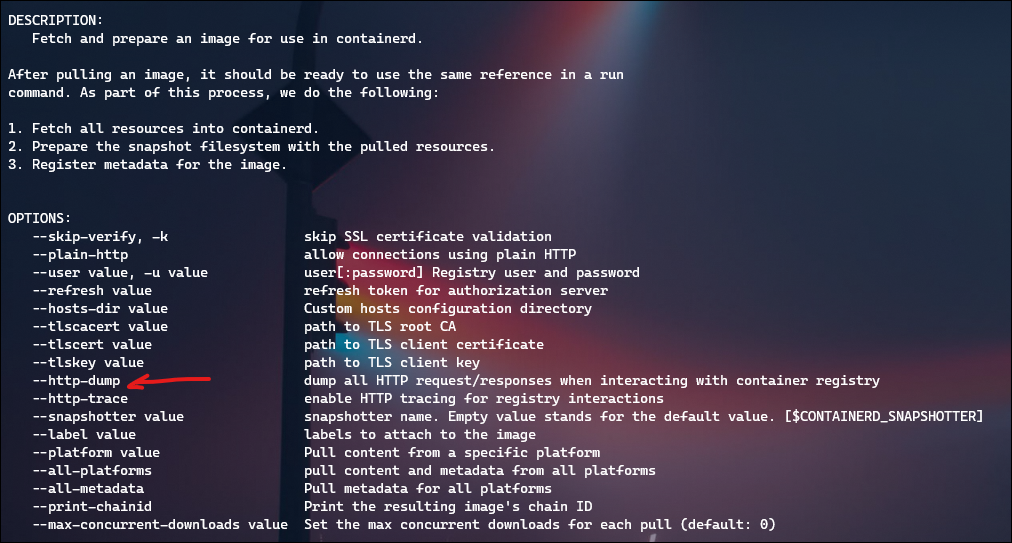

Now we’re in business: the “ctr image pull” command has an --http-dump flag, which gave me a lot more information.

I pulled both my working image and my broken image, and noticed the broken one was a LOT more chatty, with multiple HTTP requests that seemed to repeat.

Here are the requests made for the working image:

- HEAD /v2/[image path]/[image name]/manifests/[tag]

- POST /v2/_auth

- GET /v2/_auth?scope=repository%3A[image path]%2F[image name]%3Apull&service=[repo name]

- HEAD /v2/[image path]/[image name]/manifests/[tag]
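The GET /v2/_auth request above carries a URL-encoded scope. This small Python sketch shows how the request paths and that query string fit together. It assumes the `repository:<name>:pull` scope format from the Docker registry token authentication spec. The repository name `team/landing-page` and service name `proget` are hypothetical placeholders for the bracketed values in the dump.

```python
from urllib.parse import urlencode


def manifest_path(repository: str, reference: str) -> str:
    """Registry v2 path for a manifest; reference is a tag or a sha256 digest."""
    return f"/v2/{repository}/manifests/{reference}"


def blob_path(repository: str, digest: str) -> str:
    """Registry v2 path for a blob (config or layer), always addressed by digest."""
    return f"/v2/{repository}/blobs/{digest}"


def token_query(repository: str, service: str) -> str:
    """Query string for a pull-scoped token request.

    urlencode percent-encodes ':' and '/', which produces the %3A and %2F
    sequences visible in the --http-dump output.
    """
    return urlencode({"scope": f"repository:{repository}:pull", "service": service})


print(manifest_path("team/landing-page", "tag"))
print("/v2/_auth?" + token_query("team/landing-page", "proget"))
# prints:
# /v2/team/landing-page/manifests/tag
# /v2/_auth?scope=repository%3Ateam%2Flanding-page%3Apull&service=proget
```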

And this is what I saw for the broken image:

- HEAD /v2/[image path]/[image name]/manifests/[tag]

- POST /v2/_auth

- GET /v2/_auth?scope=repository%3A[image path]%2F[image name]%3Apull&service=[repo name]

- GET /v2/[image path]/[image name]/manifests/sha256:[hash]

- POST /v2/_auth

- GET /v2/_auth?scope=repository%3A[image path]%2F[image name]%3Apull&service=[repo name]

- GET /v2/[image path]/[image name]/manifests/sha256:[hash]

- GET /v2/[image path]/[image name]/blobs/sha256:[hash]

```
INFO[0001] Content-Type: application/vnd.docker.distribution.manifest.v2+json
```

And this content (truncated):

```
INFO[0001] "schemaVersion": 2,
INFO[0001] "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
INFO[0001] "config": {
INFO[0001] "mediaType": "application/vnd.docker.container.image.v1+json",
INFO[0001] "digest": "sha256:hash1"
INFO[0001] },
INFO[0001] "layers": [
INFO[0001] {
INFO[0001] "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
INFO[0001] "size": 2814559,
INFO[0001] "digest": "sha256:hash2"
INFO[0001] },
INFO[0001] {
INFO[0001] "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
INFO[0001] "size": 7341522,
INFO[0001] "digest": "sha256:hash3"
INFO[0001] },
```

I had seen this output before, in the image metadata shown by both ProGet and ACR. So I compared my broken image in ProGet against the same image in ACR, and also against my working image in ProGet.

The difference in my broken image in ProGet: the “size” attribute was missing from the config property of the Docker manifest!

ACR:

```
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
  "config": {
    "mediaType": "application/vnd.docker.container.image.v1+json",
    "size": 8486,
    "digest": "sha256:hash"
  },
}
```

ProGet:

```
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
  "config": {
    "mediaType": "application/vnd.docker.container.image.v1+json",
    "digest": "sha256:samehash"
  },
}
```

Even though I had simply re-tagged the ACR image and pushed it to ProGet, the size attribute was somehow dropped along the way.
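This difference is easy to miss when eyeballing JSON. The Python sketch below takes the manifest text (for example, the output of `docker manifest inspect`) and lists any config or layer descriptor that has no size field. The function name is hypothetical, and it only handles the single-image v2 manifest shape shown above, not manifest lists.

```python
import json
from typing import List


def descriptors_missing_size(manifest_json: str) -> List[str]:
    """Return digests of descriptors in a v2 image manifest that have no "size"."""
    manifest = json.loads(manifest_json)
    descriptors = []
    if "config" in manifest:
        descriptors.append(manifest["config"])
    descriptors.extend(manifest.get("layers", []))
    return [d.get("digest", "<no digest>") for d in descriptors if "size" not in d]


acr = '{"schemaVersion": 2, "config": {"size": 8486, "digest": "sha256:hash"}, "layers": []}'
proget = '{"schemaVersion": 2, "config": {"digest": "sha256:hash"}, "layers": []}'
print(descriptors_missing_size(acr))     # prints []
print(descriptors_missing_size(proget))  # prints ['sha256:hash']
```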

I thought a short-term fix would be to manually edit the Docker manifest in ProGet to add the size property. However, after I found and modified the file (D:\ProGet\Packages\.docker\F7\manifests\sha256), the change didn't show up in the ProGet GUI, and I didn't dig into that further.
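The missing value is not arbitrary. In a v2 manifest, the config descriptor's size is the byte length of the config blob, and its digest is the sha256 of those same bytes. This minimal Python sketch assumes you already have the raw config blob bytes (for example from `GET /v2/<name>/blobs/<digest>`). The helper name is hypothetical.

```python
import hashlib


def config_descriptor(config_blob: bytes) -> dict:
    """Rebuild the config descriptor a v2 manifest should contain for this blob."""
    return {
        "mediaType": "application/vnd.docker.container.image.v1+json",
        "size": len(config_blob),  # exact byte count of the blob
        "digest": "sha256:" + hashlib.sha256(config_blob).hexdigest(),
    }


blob = b'{"architecture": "amd64"}'
desc = config_descriptor(blob)
print(desc["size"])  # prints 25
```

One caveat with hand-editing: the manifest is itself content-addressed. Adding a size field changes the manifest's bytes and therefore its own sha256 digest, so anything that refers to the manifest by its old digest will no longer match.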

Instead, I re-checked from the Docker CLI with “docker manifest inspect [image name]:[tag]”. The ACR image had the size attribute, but somehow the image I had re-tagged and pushed to ProGet did not!

So I re-tagged my ACR image again (call it “jeff2”) and re-pushed it to ProGet. This time, the size attribute stayed! I was then able to run ‘ctr image pull’ successfully, and my ‘kubectl apply’ worked!

Here’s what I think happened:

- The first image push to ProGet (done by a teammate) went over the Internet through the Azure AD App Proxy, which stripped the size attribute from the Docker manifest

  - Perhaps similar in a way to this bug the Wikimedia team worked on with their Varnish proxy?

- Initial testing, including the docker CLI re-tag and push, was done while ProGet was still behind the Azure AD App Proxy

- Assumption: the Docker client doesn't care about a missing size attribute, but containerd does

- Assumption: the actual containerd image pull doesn't mind going through the Azure AD App Proxy (which is why my other images worked from ProGet originally)

- After switching to direct HTTPS, pushing an image to ProGet retains the size attribute.

One final test explained my re-tagging results. I put ProGet back behind the Azure AD App Proxy and re-pushed my “jeff2” tagged image (just a push, not a pull from ProGet). The size attribute was then gone both on my workstation and in ProGet!

- This confirms my assumption that re-tagging and pushing to ProGet through the proxy dropped the attribute not only in ProGet, but in my local Docker image cache too.

For now, the takeaway is: don’t put ProGet behind Azure AD App Proxy! I have a support case open with Inedo and will relay this information to them.

>Azure AD Application Proxy setting the Content-Length attribute to 0 for every HEAD request.

>Microsoft is aware of this and has it in their backlog, along with a corresponding feedback item:

What an example of closed-source software’s downside! In the OSS world, this would be a GitHub Issue, and then you yourself could file the PR to fix it!