I’m in the process of configuring DPM to back up my Hyper-V environment, which resides on an EqualLogic PS6500ES SAN.

While doing this, I hit an issue: the DPM consistency check for a 3 TB VM locked up every other VM on my cluster because of high write latencies. While it was running I couldn’t even get useful stats out of the EqualLogic, because SANHQ wouldn’t communicate with it and the Group Manager live sessions failed to initialize.

After some investigation, I did the following on all my Hyper-V hosts and my DPM host:

– Disabled “Large Send Offload” for every NIC

– Set “Receive Side Scaling” queues to 8

– Disabled the Nagle algorithm for iSCSI NICs (http://social.technet.microsoft.com/wiki/contents/articles/7636.iscsi-and-the-nagle-algorithm.aspx)

– Updated the Broadcom firmware and drivers

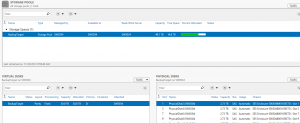

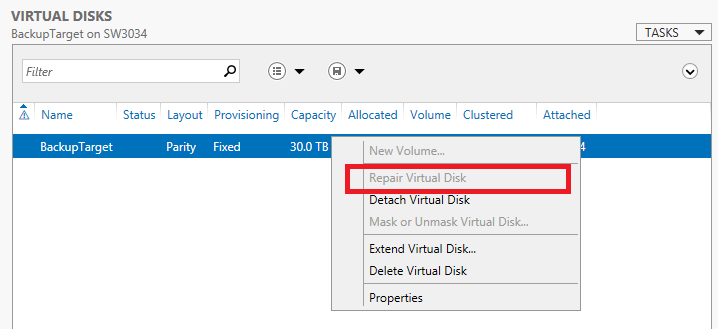
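The Nagle change in the list above is made per network interface in the registry, so it can be tedious across several hosts. As a rough sketch only: the Python below builds the text of a `.reg` file that sets `TcpAckFrequency` and `TcpNoDelay` to 1 under each iSCSI interface key, which is the approach commonly documented for this tweak. The function name `nagle_reg_text` and the GUID in the example are hypothetical placeholders. Look up the real interface GUIDs for the iSCSI NICs, and review the output before importing it anywhere.

```python
import uuid

# Per-interface TCP/IP parameters live under this key on Windows.
BASE_KEY = (
    r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet"
    r"\Services\Tcpip\Parameters\Interfaces"
)


def nagle_reg_text(interface_guids):
    """Return .reg file text that sets TcpAckFrequency=1 and TcpNoDelay=1
    for each given interface GUID (hypothetical helper, not a vendor tool)."""
    lines = ["Windows Registry Editor Version 5.00", ""]
    for raw in interface_guids:
        # uuid.UUID validates the value and accepts it with or without braces.
        guid = str(uuid.UUID(raw)).upper()
        lines.append(f"[{BASE_KEY}\\{{{guid}}}]")
        lines.append('"TcpAckFrequency"=dword:00000001')
        lines.append('"TcpNoDelay"=dword:00000001')
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder GUID for illustration; substitute the iSCSI NIC GUIDs.
    print(nagle_reg_text(["{01234567-89ab-cdef-0123-456789abcdef}"]))
```

Generating a file rather than writing to the registry directly keeps the change reviewable, and the same file can be imported on each Hyper-V host and the DPM host once the GUIDs are adjusted per machine.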

Following these changes, I still see very high write latency on my backup datastore volume, but the other volumes operate perfectly.